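This article describes how to compile the beamforming module in WebRTC. The technical principles of the beamforming algorithm will be covered in the next article.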

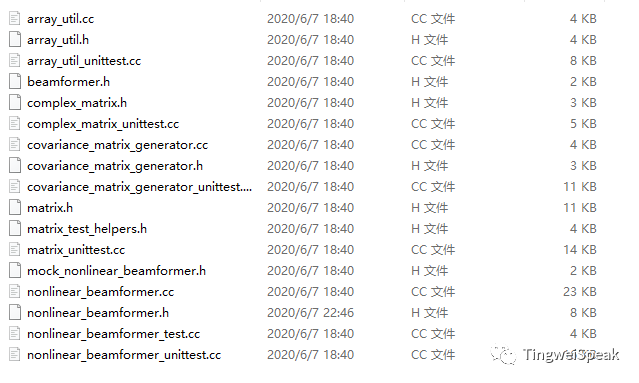

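WebRTC is an extremely large project with very complex file dependencies, which caused me, still a beginner, a lot of trouble. At first I took the brute-force route. I treated nonlinear_beamformer_test.cc from the beamforming module as the main file, compiled it, and added whatever files were missing to a Visual Studio project. After doing this for a while, I realized how clumsy the approach was: you may end up pulling in the entire WebRTC codebase just to compile one beamforming module. Later I came across CMake and discovered how powerful it is. The key code of the beamforming module is in the `webrtc/modules/audio_processing/beamformer` directory, and nonlinear_beamformer_test.cc there is the module's test program.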

Its code is as follows:

```cpp
/*
 *  Copyright (c) 2014 The WebRTC project authors. All Rights Reserved.
 *
 *  Use of this source code is governed by a BSD-style license
 *  that can be found in the LICENSE file in the root of the source
 *  tree. An additional intellectual property rights grant can be found
 *  in the file PATENTS. All contributing project authors may
 *  be found in the AUTHORS file in the root of the source tree.
 */

#include <vector>

#include "gflags/gflags.h"
#include "webrtc/base/checks.h"
#include "webrtc/base/format_macros.h"
#include "webrtc/common_audio/channel_buffer.h"
#include "webrtc/common_audio/wav_file.h"
#include "webrtc/modules/audio_processing/beamformer/nonlinear_beamformer.h"
#include "webrtc/modules/audio_processing/test/test_utils.h"

DEFINE_string(i, "", "The name of the input file to read from.");
DEFINE_string(o, "out.wav", "Name of the output file to write to.");
DEFINE_string(mic_positions, "",
    "Space delimited cartesian coordinates of microphones in meters. "
    "The coordinates of each point are contiguous. "
    "For a two element array: \"x1 y1 z1 x2 y2 z2\"");

namespace webrtc {
namespace {

const int kChunksPerSecond = 100;
const int kChunkSizeMs = 1000 / kChunksPerSecond;

const char kUsage[] =
    "Command-line tool to run beamforming on WAV files. The signal is passed\n"
    "in as a single band, unlike the audio processing interface which splits\n"
    "signals into multiple bands.";

}  // namespace

int main(int argc, char* argv[]) {
  google::SetUsageMessage(kUsage);
  google::ParseCommandLineFlags(&argc, &argv, true);

  WavReader in_file(FLAGS_i);
  WavWriter out_file(FLAGS_o, in_file.sample_rate(), 1);  // mono output

  // One x y z point per input channel (microphone).
  const size_t num_mics = in_file.num_channels();
  const std::vector<Point> array_geometry =
      ParseArrayGeometry(FLAGS_mic_positions, num_mics);
  RTC_CHECK_EQ(array_geometry.size(), num_mics);

  NonlinearBeamformer bf(array_geometry);
  bf.Initialize(kChunkSizeMs, in_file.sample_rate());

  printf("Input file: %s\nChannels: %" PRIuS ", Sample rate: %d Hz\n\n",
         FLAGS_i.c_str(), in_file.num_channels(), in_file.sample_rate());
  printf("Output file: %s\nChannels: %" PRIuS ", Sample rate: %d Hz\n\n",
         FLAGS_o.c_str(), out_file.num_channels(), out_file.sample_rate());

  // Buffers hold one 10 ms chunk: sample_rate / 100 frames per channel.
  ChannelBuffer<float> in_buf(
      rtc::CheckedDivExact(in_file.sample_rate(), kChunksPerSecond),
      in_file.num_channels());
  ChannelBuffer<float> out_buf(
      rtc::CheckedDivExact(out_file.sample_rate(), kChunksPerSecond),
      out_file.num_channels());

  std::vector<float> interleaved(in_buf.size());
  while (in_file.ReadSamples(interleaved.size(),
                             &interleaved[0]) == interleaved.size()) {
    FloatS16ToFloat(&interleaved[0], interleaved.size(), &interleaved[0]);
    Deinterleave(&interleaved[0], in_buf.num_frames(),
                 in_buf.num_channels(), in_buf.channels());

    bf.ProcessChunk(in_buf, &out_buf);

    // The output is mono, so only the first out_buf.size() samples of
    // `interleaved` hold beamformed data; convert and write just those.
    Interleave(out_buf.channels(), out_buf.num_frames(),
               out_buf.num_channels(), &interleaved[0]);
    FloatToFloatS16(&interleaved[0], out_buf.size(), &interleaved[0]);
    out_file.WriteSamples(&interleaved[0], out_buf.size());
  }

  return 0;
}

}  // namespace webrtc

int main(int argc, char* argv[]) {
  return webrtc::main(argc, argv);
}
```
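The I/O loop in `main` above works in fixed 10 ms chunks. With `kChunksPerSecond = 100`, each chunk holds `sample_rate / 100` frames per channel. The test computes this with `rtc::CheckedDivExact`, so the sample rate must be a multiple of 100. Each `ReadSamples` call then reads frames × channels interleaved samples.

The standalone sketch below reproduces that arithmetic and the interleave/deinterleave layout change in plain C++, without WebRTC. The helper names (`DivExactSketch`, `FramesPerChunk`, `DeinterleaveSketch`, `InterleaveSketch`) are hypothetical, not WebRTC functions. Where WebRTC's check aborts, this sketch throws an exception instead.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for rtc::CheckedDivExact: throws unless b divides a.
inline int DivExactSketch(int a, int b) {
  if (b == 0 || a % b != 0) throw std::invalid_argument("not exactly divisible");
  return a / b;
}

// Frames per channel in one chunk, as computed for in_buf/out_buf above.
inline std::size_t FramesPerChunk(int sample_rate, int chunks_per_second = 100) {
  return static_cast<std::size_t>(DivExactSketch(sample_rate, chunks_per_second));
}

// Splits interleaved samples [c0 c1 c0 c1 ...] into one vector per channel,
// the layout change Deinterleave() performs before ProcessChunk().
inline std::vector<std::vector<float>> DeinterleaveSketch(
    const std::vector<float>& interleaved, std::size_t num_channels) {
  if (num_channels == 0) throw std::invalid_argument("no channels");
  const std::size_t num_frames = interleaved.size() / num_channels;
  std::vector<std::vector<float>> channels(num_channels,
                                           std::vector<float>(num_frames));
  for (std::size_t i = 0; i < num_frames; ++i)
    for (std::size_t c = 0; c < num_channels; ++c)
      channels[c][i] = interleaved[i * num_channels + c];
  return channels;
}

// Inverse operation, matching what Interleave() does after ProcessChunk().
inline std::vector<float> InterleaveSketch(
    const std::vector<std::vector<float>>& channels) {
  const std::size_t num_channels = channels.size();
  const std::size_t num_frames = num_channels ? channels[0].size() : 0;
  std::vector<float> interleaved(num_frames * num_channels);
  for (std::size_t i = 0; i < num_frames; ++i)
    for (std::size_t c = 0; c < num_channels; ++c)
      interleaved[i * num_channels + c] = channels[c][i];
  return interleaved;
}
```

Some examples:

- A 16 kHz two-channel file gives 160 frames per chunk and 320 interleaved samples per read.
- A 22.05 kHz file would be rejected, because 22050 is not a multiple of 100.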

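From this test file, the key pieces needed are roughly:

- gflags, which parses the command-line arguments.
- `WavReader` and `WavWriter`, which read and write WAV files. Both are declared in `wav_file.h` under `webrtc/common_audio`.
- The beamformer class `NonlinearBeamformer`, implemented in nonlinear_beamformer.cc and nonlinear_beamformer.h.

The core of it all is this single call: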

```cpp
bf.ProcessChunk(in_buf, &out_buf);
```
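`ProcessChunk` takes one chunk with one channel per microphone. In this test program it fills a single-channel output chunk with the same number of frames.

To make that shape contract concrete without pulling in WebRTC, here is a minimal sketch. It uses a hypothetical `ChunkSketch` type as a stand-in for `ChannelBuffer<float>`. The body of `ProcessChunkSketch` (also hypothetical) simply averages the microphone channels as a placeholder. It is not the nonlinear beamforming algorithm, which the author leaves for the next article.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical, simplified stand-in for webrtc::ChannelBuffer<float>:
// channels[c][i] is sample i of channel c within one chunk.
struct ChunkSketch {
  std::vector<std::vector<float>> channels;
  std::size_t num_channels() const { return channels.size(); }
  std::size_t num_frames() const {
    return channels.empty() ? 0 : channels[0].size();
  }
};

// Same in/out shape as bf.ProcessChunk(in_buf, &out_buf) in the test:
// num_mics input channels in, one output channel out, equal frame counts.
// Placeholder processing: a plain average over microphones, NOT the
// NonlinearBeamformer algorithm.
void ProcessChunkSketch(const ChunkSketch& in, ChunkSketch* out) {
  if (out->num_channels() != 1 || out->num_frames() != in.num_frames())
    throw std::invalid_argument("output must be mono with the same frame count");
  for (std::size_t i = 0; i < in.num_frames(); ++i) {
    float sum = 0.f;
    for (const auto& ch : in.channels) sum += ch[i];
    out->channels[0][i] = sum / static_cast<float>(in.num_channels());
  }
}
```

Keeping the output buffer allocated by the caller mirrors how the test reuses `out_buf` for every chunk instead of allocating a new one per call.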

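To understand WebRTC's beamforming algorithm, you have to understand how `ProcessChunk` executes and how it is implemented. Now let's compile the beamforming module.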

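First, here is the CMakeLists.txt file. The directory layout in it is the one on my machine. If you build on your own machine, you may need to adjust the paths accordingly.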

cmake_minimum_required(VERSION 2.8)

project(wav-beamforming)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

set(CMAKE_INSTALL_RPATH_USE_LINK_PATH TRUE)

add_subdirectory(gflags)

add_definitions("-DWEBRTC_LINUX -DWEBRTC_POSIX -DWEBRTC_NS_FLOAT")

#-DWEBRTC_UNTRUSTED_DELAY

include_directories(

webrtc

webrtc/webrtc/common_audio/signal_processing/include

webrtc/webrtc/modules/audio_coding/codecs/isac/main/include

webrtc/webrtc/modules/audio_processing/test/

)

set(WEBRTC_SRC_

base/buffer.cc

base/checks.cc

base/criticalsection.cc

base/event.cc

base/event_tracer.cc

base/logging.cc

base/platform_file.cc

base/platform_thread.cc

base/stringencode.cc

base/thread_checker_impl.cc

base/timeutils.cc

common_audio/audio_converter.cc

common_audio/audio_ring_buffer.cc

common_audio/audio_util.cc

common_audio/blocker.cc

common_audio/channel_buffer.cc

common_audio/fft4g.c

common_audio/fir_filter.cc

common_audio/fir_filter_sse.cc

common_audio/lapped_transform.cc

common_audio/real_fourier.cc

common_audio/real_fourier_ooura.cc

common_audio/resampler/push_resampler.cc

common_audio/resampler/push_sinc_resampler.cc

common_audio/resampler/resampler.cc

common_audio/resampler/sinc_resampler.cc

common_audio/resampler/sinc_resampler_sse.cc

common_audio/resampler/sinusoidal_linear_chirp_source.cc

common_audio/ring_buffer.c

common_audio/signal_processing/auto_correlation.c

common_audio/signal_processing/auto_corr_to_refl_coef.c

common_audio/signal_processing/complex_bit_reverse.c

common_audio/signal_processing/complex_fft.c

common_audio/signal_processing/copy_set_operations.c

common_audio/signal_processing/cross_correlation.c

common_audio/signal_processing/division_operations.c

common_audio/signal_processing/dot_product_with_scale.c

common_audio/signal_processing/downsample_fast.c

common_audio/signal_processing/energy.c

common_audio/signal_processing/filter_ar.c

common_audio/signal_processing/filter_ar_fast_q12.c

common_audio/signal_processing/filter_ma_fast_q12.c

common_audio/signal_processing/get_hanning_window.c

common_audio/signal_processing/get_scaling_square.c

common_audio/signal_processing/ilbc_specific_functions.c

common_audio/signal_processing/levinson_durbin.c

common_audio/signal_processing/lpc_to_refl_coef.c

common_audio/signal_processing/min_max_operations.c

common_audio/signal_processing/randomization_functions.c

common_audio/signal_processing/real_fft.c

common_audio/signal_processing/refl_coef_to_lpc.c

common_audio/signal_processing/resample_48khz.c

common_audio/signal_processing/resample_by_2.c

common_audio/signal_processing/resample_by_2_internal.c

common_audio/signal_processing/resample.c

common_audio/signal_processing/resample_fractional.c

common_audio/signal_processing/spl_init.c

common_audio/signal_processing/splitting_filter.c

common_audio/signal_processing/spl_sqrt.c

common_audio/signal_processing/spl_sqrt_floor.c

common_audio/signal_processing/sqrt_of_one_minus_x_squared.c

common_audio/signal_processing/vector_scaling_operations.c

common_audio/sparse_fir_filter.cc

common_audio/vad/vad_core.c

common_audio/vad/vad_filterbank.c

common_audio/vad/vad_gmm.c

common_audio/vad/vad_sp.c

common_audio/vad/webrtc_vad.c

common_audio/wav_file.cc

common_audio/wav_header.cc

common_audio/window_generator.cc

common_types.cc

modules/audio_coding/codecs/audio_decoder.cc

modules/audio_coding/codecs/audio_encoder.cc

modules/audio_coding/codecs/isac/locked_bandwidth_info.cc

modules/audio_coding/codecs/isac/main/source/arith_routines.c

modules/audio_coding/codecs/isac/main/source/arith_routines_hist.c

modules/audio_coding/codecs/isac/main/source/arith_routines_logist.c

modules/audio_coding/codecs/isac/main/source/audio_decoder_isac.cc

modules/audio_coding/codecs/isac/main/source/audio_encoder_isac.cc

modules/audio_coding/codecs/isac/main/source/bandwidth_estimator.c

modules/audio_coding/codecs/isac/main/source/crc.c

modules/audio_coding/codecs/isac/main/source/decode_bwe.c

modules/audio_coding/codecs/isac/main/source/decode.c

modules/audio_coding/codecs/isac/main/source/encode.c

modules/audio_coding/codecs/isac/main/source/encode_lpc_swb.c

modules/audio_coding/codecs/isac/main/source/entropy_coding.c

modules/audio_coding/codecs/isac/main/source/fft.c

modules/audio_coding/codecs/isac/main/source/filterbanks.c

modules/audio_coding/codecs/isac/main/source/filterbank_tables.c

modules/audio_coding/codecs/isac/main/source/filter_functions.c

modules/audio_coding/codecs/isac/main/source/intialize.c

modules/audio_coding/codecs/isac/main/source/isac.c

modules/audio_coding/codecs/isac/main/source/lattice.c

modules/audio_coding/codecs/isac/main/source/lpc_analysis.c

modules/audio_coding/codecs/isac/main/source/lpc_gain_swb_tables.c

modules/audio_coding/codecs/isac/main/source/lpc_shape_swb12_tables.c

modules/audio_coding/codecs/isac/main/source/lpc_shape_swb16_tables.c

modules/audio_coding/codecs/isac/main/source/lpc_tables.c

modules/audio_coding/codecs/isac/main/source/pitch_estimator.c

modules/audio_coding/codecs/isac/main/source/pitch_filter.c

modules/audio_coding/codecs/isac/main/source/pitch_gain_tables.c

modules/audio_coding/codecs/isac/main/source/pitch_lag_tables.c

modules/audio_coding/codecs/isac/main/source/spectrum_ar_model_tables.c

modules/audio_coding/codecs/isac/main/source/transform.c

modules/audio_processing/aec/aec_core.cc

modules/audio_processing/aec/aec_core_sse2.cc

modules/audio_processing/aec/aec_rdft.cc

modules/audio_processing/aec/aec_rdft_sse2.cc

modules/audio_processing/aec/aec_resampler.cc

modules/audio_processing/aec/echo_cancellation.cc

modules/audio_processing/aecm/aecm_core.cc

modules/audio_processing/aecm/aecm_core_c.cc

modules/audio_processing/aecm/echo_control_mobile.cc

modules/audio_processing/agc/agc.cc

modules/audio_processing/agc/agc_manager_direct.cc

modules/audio_processing/agc/histogram.cc

modules/audio_processing/agc/legacy/analog_agc.c

modules/audio_processing/agc/legacy/digital_agc.c

modules/audio_processing/agc/utility.cc

modules/audio_processing/audio_buffer.cc

modules/audio_processing/audio_processing_impl.cc

modules/audio_processing/beamformer/array_util.cc

modules/audio_processing/beamformer/covariance_matrix_generator.cc

modules/audio_processing/beamformer/nonlinear_beamformer.cc

modules/audio_processing/echo_cancellation_impl.cc

modules/audio_processing/echo_control_mobile_impl.cc

modules/audio_processing/gain_control_for_experimental_agc.cc

modules/audio_processing/gain_control_impl.cc

modules/audio_processing/high_pass_filter_impl.cc

modules/audio_processing/intelligibility/intelligibility_enhancer.cc

modules/audio_processing/intelligibility/intelligibility_utils.cc

modules/audio_processing/level_estimator_impl.cc

modules/audio_processing/logging/aec_logging_file_handling.cc

modules/audio_processing/noise_suppression_impl.cc

modules/audio_processing/ns/noise_suppression.c

modules/audio_processing/ns/ns_core.c

modules/audio_processing/rms_level.cc

modules/audio_processing/splitting_filter.cc

modules/audio_processing/three_band_filter_bank.cc

modules/audio_processing/transient/file_utils.cc

modules/audio_processing/transient/moving_moments.cc

modules/audio_processing/transient/transient_detector.cc

modules/audio_processing/transient/transient_suppressor.cc

modules/audio_processing/transient/wpd_node.cc

modules/audio_processing/transient/wpd_tree.cc

modules/audio_processing/typing_detection.cc

modules/audio_processing/utility/block_mean_calculator.cc

modules/audio_processing/utility/delay_estimator.cc

modules/audio_processing/utility/delay_estimator_wrapper.cc

modules/audio_processing/vad/gmm.cc

modules/audio_processing/vad/pitch_based_vad.cc

modules/audio_processing/vad/pitch_internal.cc

modules/audio_processing/vad/pole_zero_filter.cc

modules/audio_processing/vad/standalone_vad.cc

modules/audio_processing/vad/vad_audio_proc.cc

modules/audio_processing/vad/vad_circular_buffer.cc

modules/audio_processing/vad/voice_activity_detector.cc

modules/audio_processing/voice_detection_impl.cc

modules/audio_processing/test/test_utils.cc

system_wrappers/source/aligned_malloc.cc

system_wrappers/source/cpu_features.cc

system_wrappers/source/file_impl.cc

system_wrappers/source/logging.cc

system_wrappers/source/metrics_default.cc

system_wrappers/source/rw_lock.cc

system_wrappers/source/rw_lock_posix.cc

system_wrappers/source/trace_impl.cc

system_wrappers/source/trace_posix.cc

)

function(prepend_path var prefix)

set(listVar "")

foreach(f ${ARGN})

list(APPEND listVar "${prefix}/${f}")

endforeach(f)

set(${var} "${listVar}" PARENT_SCOPE)

endfunction(prepend_path)

prepend_path(WEBRTC_SRC webrtc/webrtc ${WEBRTC_SRC_})

add_executable(webrtc-bf nonlinear_beamformer_test.cc ${WEBRTC_SRC})

target_link_libraries(webrtc-bf gflags pthread)

SET(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -O0 -Wall -g -ggdb")

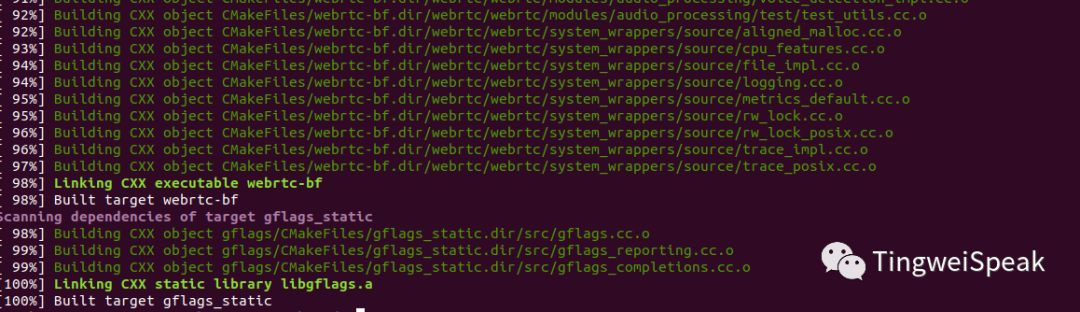

SET(CMAKE_CXX_FLAGS_RELEASE "$ENV{CXXFLAGS} -O3 -Wall")为了省事,将相关的文件全部加上了。然后在ubuntu下编译。首先新建build,然后在build目录里执行cmake ..以及make命令:

mkdir build

cmake ..

makemake成功后出现

此时在build目录下生成了可执行文件

然后使用如下命令,

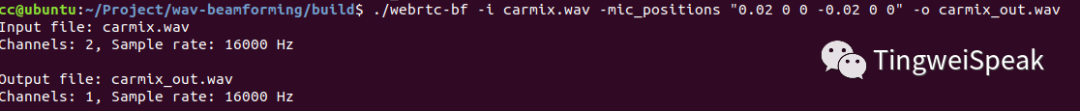

./webrtc-bf -i input.wav -mic_positions "x1 y1 z1 x2 y2 z2" -o output.wav

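If the input is two-channel audio, the values after `-mic_positions` are the coordinates of the two microphones. For more channels, supply one x y z triple (in meters) per additional microphone. carmix.wav is two-channel data, so the microphone coordinates are set to `0.02 0 0 -0.02 0 0`. This places two microphones 4 cm apart on the x axis, with the origin at the midpoint between them.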
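The `-mic_positions` string is a flat list of x y z triples in meters, one triple per input channel. The test program uses `RTC_CHECK_EQ` to verify that the parsed geometry has exactly one point per channel.

Below is a rough, hypothetical standalone equivalent of that parsing and check. The names `MicPoint`, `ParseMicPositionsSketch` and `DistanceSketch` are illustrative only. This is not WebRTC's actual `ParseArrayGeometry` implementation, and it throws where WebRTC's check would abort.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical point type; WebRTC uses its own Point type.
struct MicPoint { float x, y, z; };

// Reads "x1 y1 z1 x2 y2 z2 ..." (meters) and requires 3 values per mic.
std::vector<MicPoint> ParseMicPositionsSketch(const std::string& text,
                                              std::size_t num_mics) {
  std::istringstream in(text);
  std::vector<float> values;
  float v;
  while (in >> v) values.push_back(v);
  // Stopping before end of input means a token was not a number.
  if (!in.eof()) throw std::invalid_argument("non-numeric coordinate");
  if (values.size() != 3 * num_mics)
    throw std::invalid_argument("need exactly 3 coordinates per microphone");
  std::vector<MicPoint> points;
  for (std::size_t i = 0; i < values.size(); i += 3)
    points.push_back({values[i], values[i + 1], values[i + 2]});
  return points;
}

// Euclidean distance between two microphones, in meters.
float DistanceSketch(const MicPoint& a, const MicPoint& b) {
  const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
  return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

With the carmix.wav settings, `ParseMicPositionsSketch("0.02 0 0 -0.02 0 0", 2)` yields two points 0.04 m apart whose midpoint is the origin. Passing only three numbers for two channels throws.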

With that, the program runs successfully. The related files have been uploaded to GitHub: https://github.com/ctwgL/webrtc-beamforming

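This article covered how to compile the beamforming module in WebRTC. The code details, technical principles and processing results will be covered in the next article.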

Author: TingweiSpeak. Source: WeChat official account 音频探险记. Please credit the source when reposting.
