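H265 is a different story from H264. WebRTC already handles H264 internally, so enabling H264 encoding and decoding takes only minor changes (hardware encoding only requires declaring the codec in the SDP, and software encoding only requires turning on a switch). Supporting H265 means adding new interfaces and also adding the logic that packetizes and depacketizes RTP packets. This article is based on M86.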

Enabling H265 encoding and decoding

Turning on support

- Add SDP support:

@Override

public VideoCodecInfo[] getSupportedCodecs() {

...

for (VideoCodecMimeType type : new VideoCodecMimeType[] {

VideoCodecMimeType.VP8, VideoCodecMimeType.VP9, VideoCodecMimeType.H264,

VideoCodecMimeType.H265})

...

return supportedCodecInfos.toArray(new VideoCodecInfo[supportedCodecInfos.size()]);

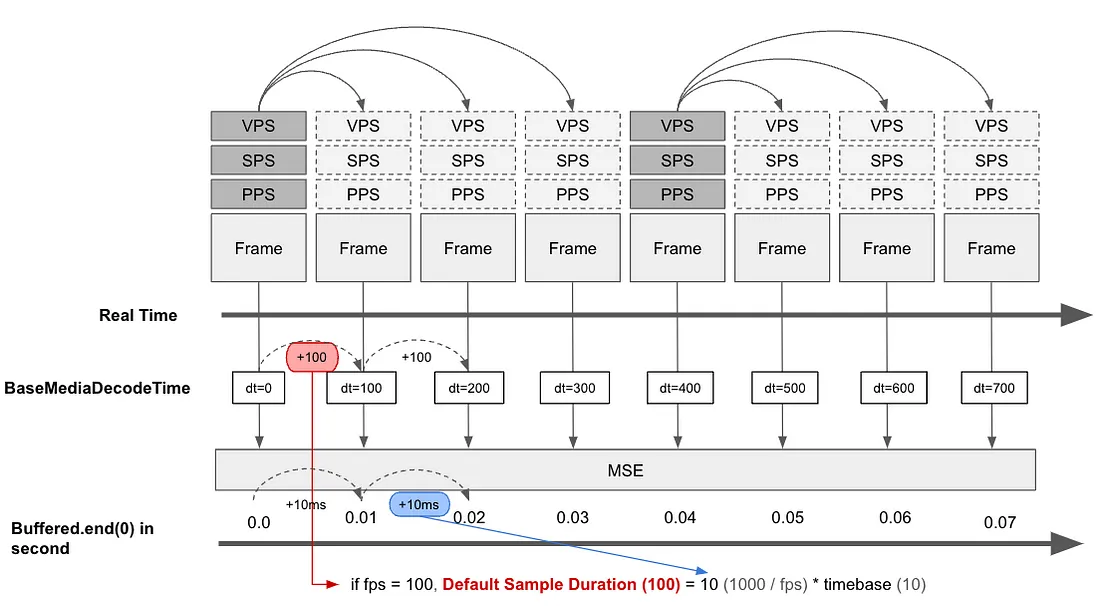

}- 增加sps/pps/vps支持:

protected void deliverEncodedImage() {

...

final ByteBuffer frameBuffer;

if (isKeyFrame && (codecType == VideoCodecMimeType.H264

|| codecType == VideoCodecMimeType.H265))

}

Packetization support
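The packetization code in this section works on the two-byte HEVC NAL unit header, using masks such as kHevcTypeMask and kHevcTIDMask. As a minimal sketch of what those masks extract, the standalone helper below decodes the header fields. The names HevcNalHeader and ParseHevcNalHeader are hypothetical and are not part of WebRTC; the bit layout (1-bit F, 6-bit type, 6-bit layer ID, 3-bit TID) follows the masks used in the listings.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Illustrative type, not part of WebRTC.
struct HevcNalHeader {
  bool forbidden_zero_bit;    // F: 1 bit
  uint8_t type;               // Type: 6 bits
  uint8_t layer_id;           // LayerId: 6 bits, split across both bytes
  uint8_t temporal_id_plus1;  // TID: 3 bits
};

// Decodes the first two bytes of a NAL unit (start code already removed).
// The constants mirror kHevcFBit (0x80), kHevcTypeMask (0x7E),
// kHevcLayerIDHMask (0x1), kHevcLayerIDLMask (0xF8) and kHevcTIDMask (0x7).
inline HevcNalHeader ParseHevcNalHeader(const uint8_t* nalu, size_t size) {
  assert(nalu != nullptr && size >= 2);
  HevcNalHeader header;
  header.forbidden_zero_bit = (nalu[0] & 0x80) != 0;
  header.type = static_cast<uint8_t>((nalu[0] & 0x7E) >> 1);
  header.layer_id =
      static_cast<uint8_t>(((nalu[0] & 0x01) << 5) | ((nalu[1] & 0xF8) >> 3));
  header.temporal_id_plus1 = static_cast<uint8_t>(nalu[1] & 0x07);
  return header;
}
```

For example, the bytes 0x40 0x01 decode to type 32 (kVps in the enum used later), layer ID 0 and TID 1, while 0x26 0x01 decodes to type 19 (kIdrWRadl).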

- Add the depacketizer creation entry point:

...

#ifndef DISABLE_H265

#include "modules/rtp_rtcp/source/video_rtp_depacketizer_h265.h"

#endif

...

#ifndef DISABLE_H265

case kVideoCodecH265:

return std::make_unique<VideoRtpDepacketizerH265>();

#endif

...

- Add H265 support to the format base class:

...

#ifndef DISABLE_H265

#include "modules/rtp_rtcp/source/rtp_format_h265.h"

#endif

...

#ifndef DISABLE_H265

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#endif

...

std::unique_ptr<RtpPacketizer> RtpPacketizer::Create(

absl::optional<VideoCodecType> type,

rtc::ArrayView<const uint8_t> payload,

PayloadSizeLimits limits,

// Codec-specific details.

const RTPVideoHeader& rtp_video_header) {

if (!type) {

// Use raw packetizer.

return std::make_unique<RtpPacketizerGeneric>(payload, limits);

}

switch (*type) {

case kVideoCodecH264: {

const auto& h264 =

absl::get<RTPVideoHeaderH264>(rtp_video_header.video_type_header);

return std::make_unique<RtpPacketizerH264>(payload, limits,

h264.packetization_mode);

}

#ifndef DISABLE_H265

case kVideoCodecH265: {

const auto& h265 =

absl::get<RTPVideoHeaderH265>(rtp_video_header.video_type_header);

return std::make_unique<RtpPacketizerH265>(

payload, limits, h265.packetization_mode);

}

#endif

...

}

}

- Add the new H265 format implementation class, rtp_format_h265:

#include <string.h>

#include "absl/types/optional.h"

#include "absl/types/variant.h"

#include "common_video/h264/h264_common.h"

#include "common_video/h265/h265_common.h"

#include "common_video/h265/h265_pps_parser.h"

#include "common_video/h265/h265_sps_parser.h"

#include "common_video/h265/h265_vps_parser.h"

#include "modules/include/module_common_types.h"

#include "modules/rtp_rtcp/source/byte_io.h"

#include "modules/rtp_rtcp/source/rtp_format_h265.h"

#include "modules/rtp_rtcp/source/rtp_packet_to_send.h"

#include "rtc_base/logging.h"

using namespace rtc;

namespace webrtc {

namespace {

enum NaluType {

kTrailN = 0,

kTrailR = 1,

kTsaN = 2,

kTsaR = 3,

kStsaN = 4,

kStsaR = 5,

kRadlN = 6,

kRadlR = 7,

kBlaWLp = 16,

kBlaWRadl = 17,

kBlaNLp = 18,

kIdrWRadl = 19,

kIdrNLp = 20,

kCra = 21,

kVps = 32,

kHevcSps = 33,

kHevcPps = 34,

kHevcAud = 35,

kPrefixSei = 39,

kSuffixSei = 40,

kHevcAp = 48,

kHevcFu = 49

};

/*

 0                   1                   2                   3
 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|     PayloadHdr (Type=49)      |   FU header   |  DONL (cond)  |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-|

*/

// Unlike H.264, HEVC NAL header is 2-bytes.

static const size_t kHevcNalHeaderSize = 2;

// H.265's FU is constructed of 2-byte payload header, and 1-byte FU header

static const size_t kHevcFuHeaderSize = 1;

static const size_t kHevcLengthFieldSize = 2;

enum HevcNalHdrMasks {

kHevcFBit = 0x80,

kHevcTypeMask = 0x7E,

kHevcLayerIDHMask = 0x1,

kHevcLayerIDLMask = 0xF8,

kHevcTIDMask = 0x7,

kHevcTypeMaskN = 0x81,

kHevcTypeMaskInFuHeader = 0x3F

};

// Bit masks for FU headers.

enum HevcFuDefs { kHevcSBit = 0x80, kHevcEBit = 0x40, kHevcFuTypeBit = 0x3F };

} // namespace

RtpPacketizerH265::RtpPacketizerH265(

rtc::ArrayView<const uint8_t> payload,

PayloadSizeLimits limits,

H265PacketizationMode packetization_mode)

: limits_(limits),

num_packets_left_(0) {

// Guard against uninitialized memory in packetization_mode.

RTC_CHECK(packetization_mode == H265PacketizationMode::NonInterleaved ||

packetization_mode == H265PacketizationMode::SingleNalUnit);

for (const auto& nalu :

H264::FindNaluIndices(payload.data(), payload.size())) {

input_fragments_.push_back(

payload.subview(nalu.payload_start_offset, nalu.payload_size));

}

if (!GeneratePackets(packetization_mode)) {

// If failed to generate all the packets, discard already generated

// packets in case the caller would ignore return value and still try to

// call NextPacket().

num_packets_left_ = 0;

while (!packets_.empty()) {

packets_.pop();

}

}

}

RtpPacketizerH265::~RtpPacketizerH265() {}

size_t RtpPacketizerH265::NumPackets() const {

return num_packets_left_;

}

bool RtpPacketizerH265::GeneratePackets(

H265PacketizationMode packetization_mode) {

// For HEVC we follow non-interleaved mode for the packetization,

// and don't support single-nalu mode at present.

for (size_t i = 0; i < input_fragments_.size();) {

int fragment_len = input_fragments_[i].size();

int single_packet_capacity = limits_.max_payload_len;

if (input_fragments_.size() == 1)

single_packet_capacity -= limits_.single_packet_reduction_len;

else if (i == 0)

single_packet_capacity -= limits_.first_packet_reduction_len;

else if (i + 1 == input_fragments_.size()) {

// Pretend that last fragment is larger instead of making last packet

// smaller.

single_packet_capacity -= limits_.last_packet_reduction_len;

}

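if (fragment_len > single_packet_capacity) {

// Propagate failures so the constructor can discard partial results.

if (!PacketizeFu(i))

return false;

++i;

} else {

if (!PacketizeSingleNalu(i))

return false;

++i;

}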

}

return true;

}

bool RtpPacketizerH265::PacketizeFu(size_t fragment_index) {

// Fragment payload into packets (FU).

// Strip out the original header and leave room for the FU header.

rtc::ArrayView<const uint8_t> fragment = input_fragments_[fragment_index];

PayloadSizeLimits limits = limits_;

limits.max_payload_len -= kHevcFuHeaderSize + kHevcNalHeaderSize;

// Update single/first/last packet reductions unless it is single/first/last

// fragment.

if (input_fragments_.size() != 1) {

// if this fragment is put into a single packet, it might still be the

// first or the last packet in the whole sequence of packets.

if (fragment_index == input_fragments_.size() - 1) {

limits.single_packet_reduction_len = limits_.last_packet_reduction_len;

} else if (fragment_index == 0) {

limits.single_packet_reduction_len = limits_.first_packet_reduction_len;

} else {

limits.single_packet_reduction_len = 0;

}

}

if (fragment_index != 0)

limits.first_packet_reduction_len = 0;

if (fragment_index != input_fragments_.size() - 1)

limits.last_packet_reduction_len = 0;

// Strip out the original header.

size_t payload_left = fragment.size() - kHevcNalHeaderSize;

int offset = kHevcNalHeaderSize;

std::vector<int> payload_sizes = SplitAboutEqually(payload_left, limits);

if (payload_sizes.empty())

return false;

for (size_t i = 0; i < payload_sizes.size(); ++i) {

int packet_length = payload_sizes[i];

RTC_CHECK_GT(packet_length, 0);

uint16_t header = (fragment[0] << 8) | fragment[1];

packets_.push(PacketUnit(fragment.subview(offset, packet_length),

/*first_fragment=*/i == 0,

/*last_fragment=*/i == payload_sizes.size() - 1,

false, header));

offset += packet_length;

payload_left -= packet_length;

}

num_packets_left_ += payload_sizes.size();

RTC_CHECK_EQ(0, payload_left);

return true;

}

bool RtpPacketizerH265::PacketizeSingleNalu(size_t fragment_index) {

// Add a single NALU to the queue, no aggregation.

size_t payload_size_left = limits_.max_payload_len;

if (input_fragments_.size() == 1)

payload_size_left -= limits_.single_packet_reduction_len;

else if (fragment_index == 0)

payload_size_left -= limits_.first_packet_reduction_len;

else if (fragment_index + 1 == input_fragments_.size())

payload_size_left -= limits_.last_packet_reduction_len;

rtc::ArrayView<const uint8_t> fragment = input_fragments_[fragment_index];

if (payload_size_left < fragment.size()) {

RTC_LOG(LS_ERROR) << "Failed to fit a fragment to packet in SingleNalu "

"packetization mode. Payload size left "

<< payload_size_left << ", fragment length "

<< fragment.size() << ", packet capacity "

<< limits_.max_payload_len;

return false;

}

RTC_CHECK_GT(fragment.size(), 0u);

packets_.push(PacketUnit(fragment, true /* first */, true /* last */,

false /* aggregated */, fragment[0]));

++num_packets_left_;

return true;

}

int RtpPacketizerH265::PacketizeAp(size_t fragment_index) {

// Aggregate fragments into one packet (AP).

size_t payload_size_left = limits_.max_payload_len;

if (input_fragments_.size() == 1)

payload_size_left -= limits_.single_packet_reduction_len;

else if (fragment_index == 0)

payload_size_left -= limits_.first_packet_reduction_len;

int aggregated_fragments = 0;

size_t fragment_headers_length = 0;

rtc::ArrayView<const uint8_t> fragment = input_fragments_[fragment_index];

RTC_CHECK_GE(payload_size_left, fragment.size());

++num_packets_left_;

auto payload_size_needed = [&] {

size_t fragment_size = fragment.size() + fragment_headers_length;

if (input_fragments_.size() == 1) {

// Single fragment, single packet, payload_size_left already adjusted

// with limits_.single_packet_reduction_len.

return fragment_size;

}

if (fragment_index == input_fragments_.size() - 1) {

// Last fragment, so the AP might be the last packet.

return fragment_size + limits_.last_packet_reduction_len;

}

return fragment_size;

};

while (payload_size_left >= payload_size_needed()) {

RTC_CHECK_GT(fragment.size(), 0);

packets_.push(PacketUnit(fragment, aggregated_fragments == 0, false, true,

fragment[0]));

payload_size_left -= fragment.size();

payload_size_left -= fragment_headers_length;

fragment_headers_length = kHevcLengthFieldSize;

// If we are going to try to aggregate more fragments into this packet

// we need to add the AP payload header and a length field for the first

// NALU of this packet.

if (aggregated_fragments == 0)

fragment_headers_length += kHevcNalHeaderSize + kHevcLengthFieldSize;

++aggregated_fragments;

// Next fragment.

++fragment_index;

if (fragment_index == input_fragments_.size())

break;

fragment = input_fragments_[fragment_index];

}

RTC_CHECK_GT(aggregated_fragments, 0);

packets_.back().last_fragment = true;

return fragment_index;

}

bool RtpPacketizerH265::NextPacket(RtpPacketToSend* rtp_packet) {

RTC_DCHECK(rtp_packet);

if (packets_.empty()) {

return false;

}

PacketUnit packet = packets_.front();

if (packet.first_fragment && packet.last_fragment) {

// Single NAL unit packet.

size_t bytes_to_send = packet.source_fragment.size();

uint8_t* buffer = rtp_packet->AllocatePayload(bytes_to_send);

memcpy(buffer, packet.source_fragment.data(), bytes_to_send);

packets_.pop();

input_fragments_.pop_front();

} else if (packet.aggregated) {

bool is_last_packet = num_packets_left_ == 1;

NextAggregatePacket(rtp_packet, is_last_packet);

} else {

NextFragmentPacket(rtp_packet);

}

rtp_packet->SetMarker(packets_.empty());

--num_packets_left_;

return true;

}

void RtpPacketizerH265::NextAggregatePacket(RtpPacketToSend* rtp_packet,

bool last) {

size_t payload_capacity = rtp_packet->FreeCapacity();

RTC_CHECK_GE(payload_capacity, kHevcNalHeaderSize);

uint8_t* buffer = rtp_packet->AllocatePayload(payload_capacity);

RTC_CHECK(buffer);

PacketUnit* packet = &packets_.front();

RTC_CHECK(packet->first_fragment);

uint8_t payload_hdr_h = packet->header >> 8;

uint8_t payload_hdr_l = packet->header & 0xFF;

uint8_t layer_id_h = payload_hdr_h & kHevcLayerIDHMask;

payload_hdr_h =

(payload_hdr_h & kHevcTypeMaskN) | (kHevcAp << 1) | layer_id_h;

buffer[0] = payload_hdr_h;

buffer[1] = payload_hdr_l;

int index = kHevcNalHeaderSize;

bool is_last_fragment = packet->last_fragment;

while (packet->aggregated) {

// Add NAL unit length field.

rtc::ArrayView<const uint8_t> fragment = packet->source_fragment;

ByteWriter<uint16_t>::WriteBigEndian(&buffer[index], fragment.size());

index += kHevcLengthFieldSize;

// Add NAL unit.

memcpy(&buffer[index], fragment.data(), fragment.size());

index += fragment.size();

packets_.pop();

input_fragments_.pop_front();

if (is_last_fragment)

break;

packet = &packets_.front();

is_last_fragment = packet->last_fragment;

}

RTC_CHECK(is_last_fragment);

rtp_packet->SetPayloadSize(index);

}

void RtpPacketizerH265::NextFragmentPacket(RtpPacketToSend* rtp_packet) {

PacketUnit* packet = &packets_.front();

// NAL unit fragmented over multiple packets (FU).

// We do not send original NALU header, so it will be replaced by the

// PayloadHdr of the first packet.

uint8_t payload_hdr_h =

packet->header >> 8; // 1-bit F, 6-bit type, 1-bit layerID highest-bit

uint8_t payload_hdr_l = packet->header & 0xFF;

uint8_t layer_id_h = payload_hdr_h & kHevcLayerIDHMask;

uint8_t fu_header = 0;

// S | E |6 bit type.

fu_header |= (packet->first_fragment ? kHevcSBit : 0);

fu_header |= (packet->last_fragment ? kHevcEBit : 0);

uint8_t type = (payload_hdr_h & kHevcTypeMask) >> 1;

fu_header |= type;

// Now update payload_hdr_h with FU type.

payload_hdr_h =

(payload_hdr_h & kHevcTypeMaskN) | (kHevcFu << 1) | layer_id_h;

rtc::ArrayView<const uint8_t> fragment = packet->source_fragment;

uint8_t* buffer = rtp_packet->AllocatePayload(

kHevcFuHeaderSize + kHevcNalHeaderSize + fragment.size());

RTC_CHECK(buffer);

buffer[0] = payload_hdr_h;

buffer[1] = payload_hdr_l;

buffer[2] = fu_header;

// First, middle and last fragments are all copied the same way.

memcpy(buffer + kHevcFuHeaderSize + kHevcNalHeaderSize, fragment.data(),

fragment.size());

packets_.pop();

}

} // namespace webrtc
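To make the FU framing in PacketizeFu and NextFragmentPacket easier to follow, here is a minimal standalone sketch of the same byte layout: the original two-byte NAL header is dropped, each packet starts with a PayloadHdr whose type is 49, and a one-byte FU header carries the S and E bits plus the original type. The function name FragmentNalu and the max_fragment parameter are hypothetical. The sketch also splits greedily, which is a simplification; the listing above uses SplitAboutEqually and the PayloadSizeLimits reductions instead.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

constexpr uint8_t kFuType = 49;       // same value as kHevcFu
constexpr size_t kNalHeaderSize = 2;  // same value as kHevcNalHeaderSize

// Splits one NAL unit (2-byte header + payload, no start code) into FU
// packets. max_fragment is the maximum number of NAL payload bytes carried
// per packet (an assumed parameter for this sketch). Callers are expected to
// fragment only NAL units that do not fit into a single packet.
std::vector<std::vector<uint8_t>> FragmentNalu(const std::vector<uint8_t>& nalu,
                                               size_t max_fragment) {
  assert(nalu.size() > kNalHeaderSize && max_fragment > 0);
  const uint8_t original_type = static_cast<uint8_t>((nalu[0] & 0x7E) >> 1);
  // Keep F and the top layer-ID bit (mask 0x81), replace the type with 49.
  const uint8_t payload_hdr_h =
      static_cast<uint8_t>((nalu[0] & 0x81) | (kFuType << 1));
  const uint8_t payload_hdr_l = nalu[1];

  std::vector<std::vector<uint8_t>> packets;
  size_t offset = kNalHeaderSize;  // skip the original NAL header
  while (offset < nalu.size()) {
    const size_t len = std::min(max_fragment, nalu.size() - offset);
    uint8_t fu_header = original_type;
    if (offset == kNalHeaderSize)
      fu_header |= 0x80;  // S bit: first fragment
    if (offset + len == nalu.size())
      fu_header |= 0x40;  // E bit: last fragment
    std::vector<uint8_t> packet = {payload_hdr_h, payload_hdr_l, fu_header};
    packet.insert(packet.end(), nalu.data() + offset,
                  nalu.data() + offset + len);
    packets.push_back(std::move(packet));
    offset += len;
  }
  return packets;
}
```

With an IDR NAL unit {0x26, 0x01, 1, 2, 3, 4, 5} and max_fragment set to 2, the sketch yields three packets whose first three bytes are 0x62 0x01 0x93, 0x62 0x01 0x13 and 0x62 0x01 0x53.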

#ifndef WEBRTC_MODULES_RTP_RTCP_SOURCE_RTP_FORMAT_H265_H_

#define WEBRTC_MODULES_RTP_RTCP_SOURCE_RTP_FORMAT_H265_H_

#include <deque>

#include <memory>

#include <queue>

#include <string>

#include "api/array_view.h"

#include "modules/include/module_common_types.h"

#include "modules/rtp_rtcp/source/rtp_format.h"

#include "modules/rtp_rtcp/source/rtp_packet_to_send.h"

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#include "rtc_base/buffer.h"

#include "rtc_base/constructor_magic.h"

namespace webrtc {

class RtpPacketizerH265 : public RtpPacketizer {

public:

// Initialize with payload from encoder.

// The payload_data must be exactly one encoded H.265 frame.

RtpPacketizerH265(rtc::ArrayView<const uint8_t> payload,

PayloadSizeLimits limits,

H265PacketizationMode packetization_mode);

~RtpPacketizerH265() override;

size_t NumPackets() const override;

// Get the next payload with H.265 payload header.

// buffer is a pointer to where the output will be written.

// bytes_to_send is an output variable that will contain number of bytes

// written to buffer. The parameter last_packet is true for the last packet of

// the frame, false otherwise (i.e., call the function again to get the

// next packet).

// Returns true on success or false if there was no payload to packetize.

bool NextPacket(RtpPacketToSend* rtp_packet) override;

private:

struct Packet {

Packet(size_t offset,

size_t size,

bool first_fragment,

bool last_fragment,

bool aggregated,

uint16_t header)

: offset(offset),

size(size),

first_fragment(first_fragment),

last_fragment(last_fragment),

aggregated(aggregated),

header(header) {}

size_t offset;

size_t size;

bool first_fragment;

bool last_fragment;

bool aggregated;

uint16_t header; // Different from H264

};

struct PacketUnit {

PacketUnit(rtc::ArrayView<const uint8_t> source_fragment,

bool first_fragment,

bool last_fragment,

bool aggregated,

uint16_t header)

: source_fragment(source_fragment),

first_fragment(first_fragment),

last_fragment(last_fragment),

aggregated(aggregated),

header(header) {}

rtc::ArrayView<const uint8_t> source_fragment;

bool first_fragment;

bool last_fragment;

bool aggregated;

uint16_t header;

};

typedef std::queue<Packet> PacketQueue;

std::deque<rtc::ArrayView<const uint8_t>> input_fragments_;

std::queue<PacketUnit> packets_;

bool GeneratePackets(H265PacketizationMode packetization_mode);

bool PacketizeFu(size_t fragment_index);

int PacketizeAp(size_t fragment_index);

bool PacketizeSingleNalu(size_t fragment_index);

void NextAggregatePacket(RtpPacketToSend* rtp_packet, bool last);

void NextFragmentPacket(RtpPacketToSend* rtp_packet);

const PayloadSizeLimits limits_;

size_t num_packets_left_;

RTC_DISALLOW_COPY_AND_ASSIGN(RtpPacketizerH265);

};

} // namespace webrtc

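#endif // WEBRTC_MODULES_RTP_RTCP_SOURCE_RTP_FORMAT_H265_H_

- Video rendering support: VPx codecs use picture_id, temporal_id and tl0_pic_id to describe how NALUs relate to each other and whether the stream can be decoded continuously, while H26x relies on the sequence number and on the presence of SPS/PPS to judge decoding continuity between frames. For H265 we therefore simply return kNoTemporalIdx as the temporal ID: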

uint8_t RTPSenderVideo::GetTemporalId(const RTPVideoHeader& header) {

struct TemporalIdGetter {

uint8_t operator()(const RTPVideoHeaderVP8& vp8) { return vp8.temporalIdx; }

uint8_t operator()(const RTPVideoHeaderVP9& vp9) {

return vp9.temporal_idx;

}

uint8_t operator()(const RTPVideoHeaderH264&) { return kNoTemporalIdx; }

#ifndef DISABLE_H265

uint8_t operator()(const RTPVideoHeaderH265&) { return kNoTemporalIdx; }

#endif

...

return absl::visit(TemporalIdGetter(), header.video_type_header);

}

- Add H265 support to the RTP video header definition (note the difference between #ifndef and #ifdef):

#include "modules/video_coding/codecs/h264/include/h264_globals.h"

#ifndef DISABLE_H265

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#endif

#include "modules/video_coding/codecs/vp8/include/vp8_globals.h"

...

#ifdef DISABLE_H265

using RTPVideoTypeHeader = absl::variant<absl::monostate,

RTPVideoHeaderVP8,

RTPVideoHeaderVP9,

RTPVideoHeaderH264,

RTPVideoHeaderLegacyGeneric>;

#else

using RTPVideoTypeHeader = absl::variant<absl::monostate,

RTPVideoHeaderVP8,

RTPVideoHeaderVP9,

RTPVideoHeaderH264,

RTPVideoHeaderH265,

RTPVideoHeaderLegacyGeneric>;

#endif

- Add the new H265 depacketization logic, video_rtp_depacketizer_h265:

#ifndef MODULES_RTP_RTCP_SOURCE_VIDEO_RTP_DEPACKETIZER_H265_H_

#define MODULES_RTP_RTCP_SOURCE_VIDEO_RTP_DEPACKETIZER_H265_H_

#include <memory>

#include "absl/types/optional.h"

#include "modules/rtp_rtcp/source/video_rtp_depacketizer.h"

#include "rtc_base/buffer.h"

#include "rtc_base/copy_on_write_buffer.h"

namespace webrtc {

class VideoRtpDepacketizerH265 : public VideoRtpDepacketizer {

public:

~VideoRtpDepacketizerH265() override = default;

absl::optional<ParsedRtpPayload> Parse(

rtc::CopyOnWriteBuffer rtp_payload) override;

private:

struct ParsedPayload {

RTPVideoHeader& video_header() { return video; }

const RTPVideoHeader& video_header() const { return video; }

RTPVideoHeader video;

const uint8_t* payload;

size_t payload_length;

};

bool Parse(ParsedPayload* parsed_payload,

const uint8_t* payload_data,

size_t payload_data_length);

bool ParseFuNalu(ParsedPayload* parsed_payload,

const uint8_t* payload_data);

bool ProcessApOrSingleNalu(ParsedPayload* parsed_payload,

const uint8_t* payload_data);

size_t offset_;

size_t length_;

std::unique_ptr<rtc::Buffer> modified_buffer_;

};

} // namespace webrtc

#endif // MODULES_RTP_RTCP_SOURCE_VIDEO_RTP_DEPACKETIZER_H265_H_

#include "modules/rtp_rtcp/source/video_rtp_depacketizer_h265.h"

#include <cstddef>

#include <cstdint>

#include <utility>

#include <vector>

#include "absl/base/macros.h"

#include "absl/types/optional.h"

#include "absl/types/variant.h"

#include "common_video/h264/h264_common.h"

#include "common_video/h265/h265_common.h"

#include "common_video/h265/h265_pps_parser.h"

#include "common_video/h265/h265_sps_parser.h"

#include "common_video/h265/h265_vps_parser.h"

#include "modules/rtp_rtcp/source/byte_io.h"

#include "modules/rtp_rtcp/source/video_rtp_depacketizer.h"

#include "rtc_base/checks.h"

#include "rtc_base/copy_on_write_buffer.h"

#include "rtc_base/logging.h"

namespace webrtc {

namespace {

/*

 0                   1                   2                   3
 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+
|     PayloadHdr (Type=49)      |   FU header   |  DONL (cond)  |
+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-|

*/

// Unlike H.264, HEVC NAL header is 2-bytes.

static const size_t kHevcNalHeaderSize = 2;

// H.265's FU is constructed of 2-byte payload header, and 1-byte FU header

static const size_t kHevcFuHeaderSize = 1;

static const size_t kHevcLengthFieldSize = 2;

static const size_t kHevcApHeaderSize =

kHevcNalHeaderSize + kHevcLengthFieldSize;

enum HevcNalHdrMasks {

kHevcFBit = 0x80,

kHevcTypeMask = 0x7E,

kHevcLayerIDHMask = 0x1,

kHevcLayerIDLMask = 0xF8,

kHevcTIDMask = 0x7,

kHevcTypeMaskN = 0x81,

kHevcTypeMaskInFuHeader = 0x3F

};

// Bit masks for FU headers.

enum HevcFuDefs { kHevcSBit = 0x80, kHevcEBit = 0x40, kHevcFuTypeBit = 0x3F };

// TODO(pbos): Avoid parsing this here as well as inside the jitter buffer.

bool ParseApStartOffsets(const uint8_t* nalu_ptr,

size_t length_remaining,

std::vector<size_t>* offsets) {

size_t offset = 0;

while (length_remaining > 0) {

// Buffer doesn't contain room for additional nalu length.

if (length_remaining < sizeof(uint16_t))

return false;

uint16_t nalu_size = ByteReader<uint16_t>::ReadBigEndian(nalu_ptr);

nalu_ptr += sizeof(uint16_t);

length_remaining -= sizeof(uint16_t);

if (nalu_size > length_remaining)

return false;

nalu_ptr += nalu_size;

length_remaining -= nalu_size;

offsets->push_back(offset + kHevcApHeaderSize);

offset += kHevcLengthFieldSize + nalu_size;

}

return true;

}

} // namespace

bool VideoRtpDepacketizerH265::Parse(ParsedPayload* parsed_payload,

const uint8_t* payload_data,

size_t payload_data_length) {

RTC_CHECK(parsed_payload != nullptr);

if (payload_data_length == 0) {

RTC_LOG(LS_ERROR) << "Empty payload.";

return false;

}

offset_ = 0;

length_ = payload_data_length;

modified_buffer_.reset();

uint8_t nal_type = (payload_data[0] & kHevcTypeMask) >> 1;

parsed_payload->video_header()

.video_type_header.emplace<RTPVideoHeaderH265>();

if (nal_type == H265::NaluType::kFU) {

// Fragmented NAL units (FU).

if (!ParseFuNalu(parsed_payload, payload_data))

return false;

} else {

// We handle AP and single NALUs the same way here. The jitter buffer

// will depacketize the AP into NAL units later.

// TODO(sprang): Parse AP offsets here and store in fragmentation vec.

if (!ProcessApOrSingleNalu(parsed_payload, payload_data))

return false;

}

const uint8_t* payload =

modified_buffer_ ? modified_buffer_->data() : payload_data;

parsed_payload->payload = payload + offset_;

parsed_payload->payload_length = length_;

return true;

}

bool VideoRtpDepacketizerH265::ProcessApOrSingleNalu(

ParsedPayload* parsed_payload,

const uint8_t* payload_data) {

parsed_payload->video_header().width = 0;

parsed_payload->video_header().height = 0;

parsed_payload->video_header().codec = kVideoCodecH265;

parsed_payload->video_header().is_first_packet_in_frame = true;

auto& h265_header = absl::get<RTPVideoHeaderH265>(

parsed_payload->video_header().video_type_header);

const uint8_t* nalu_start = payload_data + kHevcNalHeaderSize;

const size_t nalu_length = length_ - kHevcNalHeaderSize;

uint8_t nal_type = (payload_data[0] & kHevcTypeMask) >> 1;

std::vector<size_t> nalu_start_offsets;

if (nal_type == H265::NaluType::kAP) {

// Skip the AP header (AP payload header + length).

if (length_ <= kHevcApHeaderSize) {

RTC_LOG(LS_ERROR) << "AP header truncated.";

return false;

}

if (!ParseApStartOffsets(nalu_start, nalu_length, &nalu_start_offsets)) {

RTC_LOG(LS_ERROR) << "AP packet with incorrect NALU packet lengths.";

return false;

}

h265_header.packetization_type = kH265AP;

// nal_type = (payload_data[kHevcApHeaderSize] & kHevcTypeMask) >> 1;

} else {

h265_header.packetization_type = kH265SingleNalu;

nalu_start_offsets.push_back(0);

}

h265_header.nalu_type = nal_type;

parsed_payload->video_header().frame_type = VideoFrameType::kVideoFrameDelta;

nalu_start_offsets.push_back(length_ + kHevcLengthFieldSize); // End offset.

for (size_t i = 0; i < nalu_start_offsets.size() - 1; ++i) {

size_t start_offset = nalu_start_offsets[i];

// End offset is actually start offset for next unit, excluding length field

// so remove that from this units length.

size_t end_offset = nalu_start_offsets[i + 1] - kHevcLengthFieldSize;

if (end_offset - start_offset < kHevcNalHeaderSize) { // Same as H.264.

RTC_LOG(LS_ERROR) << "AP packet too short";

return false;

}

H265NaluInfo nalu;

nalu.type = (payload_data[start_offset] & kHevcTypeMask) >> 1;

nalu.vps_id = -1;

nalu.sps_id = -1;

nalu.pps_id = -1;

start_offset += kHevcNalHeaderSize;

switch (nalu.type) {

case H265::NaluType::kVps: {

absl::optional<H265VpsParser::VpsState> vps = H265VpsParser::ParseVps(

&payload_data[start_offset], end_offset - start_offset);

if (vps) {

nalu.vps_id = vps->id;

} else {

RTC_LOG(LS_WARNING) << "Failed to parse VPS id from VPS slice.";

}

break;

}

case H265::NaluType::kSps: {

// Check if VUI is present in SPS and if it needs to be modified to

// avoid excessive decoder latency.

// Copy any previous data first (likely just the first header).

std::unique_ptr<rtc::Buffer> output_buffer(new rtc::Buffer());

if (start_offset)

output_buffer->AppendData(payload_data, start_offset);

absl::optional<H265SpsParser::SpsState> sps = H265SpsParser::ParseSps(

&payload_data[start_offset], end_offset - start_offset);

if (sps) {

parsed_payload->video_header().width = sps->width;

parsed_payload->video_header().height = sps->height;

nalu.sps_id = sps->id;

nalu.vps_id = sps->vps_id;

} else {

RTC_LOG(LS_WARNING)

<< "Failed to parse SPS and VPS id from SPS slice.";

}

parsed_payload->video_header().frame_type = VideoFrameType::kVideoFrameKey;

break;

}

case H265::NaluType::kPps: {

uint32_t pps_id;

uint32_t sps_id;

if (H265PpsParser::ParsePpsIds(&payload_data[start_offset],

end_offset - start_offset, &pps_id,

&sps_id)) {

nalu.pps_id = pps_id;

nalu.sps_id = sps_id;

} else {

RTC_LOG(LS_WARNING)

<< "Failed to parse PPS id and SPS id from PPS slice.";

}

break;

}

case H265::NaluType::kIdrWRadl:

case H265::NaluType::kIdrNLp:

case H265::NaluType::kCra:

parsed_payload->video_header().frame_type = VideoFrameType::kVideoFrameKey;

ABSL_FALLTHROUGH_INTENDED;

case H265::NaluType::kTrailN:

case H265::NaluType::kTrailR: {

absl::optional<uint32_t> pps_id =

H265PpsParser::ParsePpsIdFromSliceSegmentLayerRbsp(

&payload_data[start_offset], end_offset - start_offset,

nalu.type);

if (pps_id) {

nalu.pps_id = *pps_id;

} else {

RTC_LOG(LS_WARNING) << "Failed to parse PPS id from slice of type: "

<< static_cast<int>(nalu.type);

}

break;

}

// Slices below don't contain SPS or PPS ids.

case H265::NaluType::kAud:

case H265::NaluType::kTsaN:

case H265::NaluType::kTsaR:

case H265::NaluType::kStsaN:

case H265::NaluType::kStsaR:

case H265::NaluType::kRadlN:

case H265::NaluType::kRadlR:

case H265::NaluType::kBlaWLp:

case H265::NaluType::kBlaWRadl:

case H265::NaluType::kPrefixSei:

case H265::NaluType::kSuffixSei:

break;

case H265::NaluType::kAP:

case H265::NaluType::kFU:

RTC_LOG(LS_WARNING) << "Unexpected AP or FU received.";

return false;

}

if (h265_header.nalus_length == kMaxNalusPerPacket) {

RTC_LOG(LS_WARNING)

<< "Received packet containing more than " << kMaxNalusPerPacket

<< " NAL units. Will not keep track sps and pps ids for all of them.";

} else {

h265_header.nalus[h265_header.nalus_length++] = nalu;

}

}

return true;

}

bool VideoRtpDepacketizerH265::ParseFuNalu(

ParsedPayload* parsed_payload,

const uint8_t* payload_data) {

if (length_ < kHevcFuHeaderSize + kHevcNalHeaderSize) {

RTC_LOG(LS_ERROR) << "FU NAL units truncated.";

return false;

}

uint8_t f = payload_data[0] & kHevcFBit;

uint8_t layer_id_h = payload_data[0] & kHevcLayerIDHMask;

uint8_t layer_id_l_unshifted = payload_data[1] & kHevcLayerIDLMask;

uint8_t tid = payload_data[1] & kHevcTIDMask;

uint8_t original_nal_type = payload_data[2] & kHevcTypeMaskInFuHeader;

bool first_fragment = payload_data[2] & kHevcSBit;

H265NaluInfo nalu;

nalu.type = original_nal_type;

nalu.vps_id = -1;

nalu.sps_id = -1;

nalu.pps_id = -1;

if (first_fragment) {

offset_ = 1;

length_ -= 1;

absl::optional<uint32_t> pps_id =

H265PpsParser::ParsePpsIdFromSliceSegmentLayerRbsp(

payload_data + kHevcNalHeaderSize + kHevcFuHeaderSize,

length_ - kHevcFuHeaderSize, nalu.type);

if (pps_id) {

nalu.pps_id = *pps_id;

} else {

RTC_LOG(LS_WARNING)

<< "Failed to parse PPS from first fragment of FU NAL "

"unit with original type: "

<< static_cast<int>(nalu.type);

}

uint8_t* payload = const_cast<uint8_t*>(payload_data + offset_);

payload[0] = f | original_nal_type << 1 | layer_id_h;

payload[1] = layer_id_l_unshifted | tid;

} else {

offset_ = kHevcNalHeaderSize + kHevcFuHeaderSize;

length_ -= (kHevcNalHeaderSize + kHevcFuHeaderSize);

}

if (original_nal_type == H265::NaluType::kIdrWRadl

|| original_nal_type == H265::NaluType::kIdrNLp

|| original_nal_type == H265::NaluType::kCra) {

parsed_payload->video_header().frame_type = VideoFrameType::kVideoFrameKey;

} else {

parsed_payload->video_header().frame_type = VideoFrameType::kVideoFrameDelta;

}

parsed_payload->video_header().width = 0;

parsed_payload->video_header().height = 0;

parsed_payload->video_header().codec = kVideoCodecH265;

parsed_payload->video_header().is_first_packet_in_frame = first_fragment;

auto& h265_header = absl::get<RTPVideoHeaderH265>(

parsed_payload->video_header().video_type_header);

h265_header.packetization_type = kH265FU;

h265_header.nalu_type = original_nal_type;

if (first_fragment) {

h265_header.nalus[h265_header.nalus_length] = nalu;

h265_header.nalus_length = 1;

}

return true;

}

absl::optional<VideoRtpDepacketizer::ParsedRtpPayload>

VideoRtpDepacketizerH265::Parse(rtc::CopyOnWriteBuffer rtp_payload) {

// borrowed from https://webrtc.googlesource.com/src/+/

// 07b17df771af20a6dd98b795592acc62a623c56f

// /modules/rtp_rtcp/source/create_video_rtp_depacketizer.cc

ParsedPayload parsed_payload;

if (!Parse(&parsed_payload, rtp_payload.cdata(), rtp_payload.size())) {

return absl::nullopt;

}

absl::optional<ParsedRtpPayload> result(absl::in_place);

result->video_header = parsed_payload.video;

result->video_payload.SetData(parsed_payload.payload,

parsed_payload.payload_length);

return result;

}

} // namespace webrtc

- Add the four new files to the ninja build, and enable H265 through the rtc_use_h265 switch:

if (rtc_enable_bwe_test_logging) {

defines = [ "BWE_TEST_LOGGING_COMPILE_TIME_ENABLE=1" ]

} else {

defines = [ "BWE_TEST_LOGGING_COMPILE_TIME_ENABLE=0" ]

}

if (rtc_use_h265) {

sources += [

"source/rtp_format_h265.cc",

"source/rtp_format_h265.h",

"source/video_rtp_depacketizer_h265.cc",

"source/video_rtp_depacketizer_h265.h",

]

}

if (!rtc_use_h265) {

defines += ["DISABLE_H265"]

}

Depacketization support
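On the receive side, an aggregation packet (AP, payload type 48) has to be split back into its NAL units, which is what ParseApStartOffsets in the depacketizer does with the two-byte length fields. The sketch below shows that walk in isolation, assuming no DONL fields are present. NaluSpan and SplitAggregationPacket are hypothetical names for illustration, not WebRTC APIs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr size_t kPayloadHdrSize = 2;   // same value as kHevcNalHeaderSize
constexpr size_t kLengthFieldSize = 2;  // same value as kHevcLengthFieldSize

// Position of one aggregated NAL unit inside the RTP payload.
struct NaluSpan {
  size_t offset;  // index of the NAL unit's first header byte
  size_t size;    // NAL unit size in bytes, header included
};

// Walks an AP payload laid out as
//   PayloadHdr | size1 | NALU1 | size2 | NALU2 | ...
// and records where each NAL unit starts. Returns false if a length field is
// truncated, zero, or points past the end of the payload.
bool SplitAggregationPacket(const uint8_t* payload,
                            size_t size,
                            std::vector<NaluSpan>* out) {
  assert(payload != nullptr && out != nullptr);
  out->clear();
  if (size <= kPayloadHdrSize)
    return false;
  size_t pos = kPayloadHdrSize;
  while (pos < size) {
    if (size - pos < kLengthFieldSize)
      return false;
    // Length fields are big-endian 16-bit values.
    const size_t nalu_size =
        (static_cast<size_t>(payload[pos]) << 8) | payload[pos + 1];
    pos += kLengthFieldSize;
    if (nalu_size == 0 || nalu_size > size - pos)
      return false;
    out->push_back({pos, nalu_size});
    pos += nalu_size;
  }
  return true;
}
```

For the 11-byte payload {0x60, 0x01, 0x00, 0x03, 0x40, 0x01, 0xAA, 0x00, 0x02, 0x42, 0x01}, the function reports two NAL units, at offset 4 with size 3 and at offset 9 with size 2. Dropping the last byte makes it return false.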

- Add H265 depacketization support:

#include "modules/video_coding/codecs/h264/include/h264_globals.h"

#ifndef DISABLE_H265

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#endif

...

#ifndef DISABLE_H265

struct CodecSpecificInfoH265 {

H265PacketizationMode packetization_mode;

bool idr_frame;

};

#endif

union CodecSpecificInfoUnion {

CodecSpecificInfoVP8 VP8;

CodecSpecificInfoVP9 VP9;

CodecSpecificInfoH264 H264;

#ifndef DISABLE_H265

CodecSpecificInfoH265 H265;

#endif

};

static_assert(std::is_pod<CodecSpecificInfoUnion>::value, "");

- Set the codec type:

void VCMEncodedFrame::CopyCodecSpecific(const RTPVideoHeader* header) {

...

#ifndef DISABLE_H265

case kVideoCodecH265: {

_codecSpecificInfo.codecType = kVideoCodecH265;

break;

}

#endif

default: {

_codecSpecificInfo.codecType = kVideoCodecGeneric;

break;

}

}

- Add a new tracker that parses VPS/SPS/PPS information, modeled on h264_sps_pps_tracker:

#include "modules/video_coding/h265_vps_sps_pps_tracker.h"

#include <string>

#include <utility>

#include "common_video/h264/h264_common.h"

#include "common_video/h265/h265_common.h"

#include "common_video/h265/h265_pps_parser.h"

#include "common_video/h265/h265_sps_parser.h"

#include "common_video/h265/h265_vps_parser.h"

#include "modules/video_coding/codecs/h264/include/h264_globals.h"

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#include "modules/video_coding/frame_object.h"

#include "modules/video_coding/packet_buffer.h"

#include "rtc_base/checks.h"

#include "rtc_base/logging.h"

namespace webrtc {

namespace video_coding {

namespace {

const uint8_t start_code_h265[] = {0, 0, 0, 1};

} // namespace

H265VpsSpsPpsTracker::FixedBitstream H265VpsSpsPpsTracker::CopyAndFixBitstream(

rtc::ArrayView<const uint8_t> bitstream,

RTPVideoHeader* video_header) {

RTC_DCHECK(video_header);

RTC_DCHECK(video_header->codec == kVideoCodecH265);

auto& h265_header =

absl::get<RTPVideoHeaderH265>(video_header->video_type_header);

bool append_vps_sps_pps = false;

auto vps = vps_data_.end();

auto sps = sps_data_.end();

auto pps = pps_data_.end();

for (size_t i = 0; i < h265_header.nalus_length; ++i) {

const H265NaluInfo& nalu = h265_header.nalus[i];

switch (nalu.type) {

case H265::NaluType::kVps: {

vps_data_[nalu.vps_id].size = 0;

break;

}

case H265::NaluType::kSps: {

sps_data_[nalu.sps_id].vps_id = nalu.vps_id;

sps_data_[nalu.sps_id].width = video_header->width;

sps_data_[nalu.sps_id].height = video_header->height;

break;

}

case H265::NaluType::kPps: {

pps_data_[nalu.pps_id].sps_id = nalu.sps_id;

break;

}

case H265::NaluType::kIdrWRadl:

case H265::NaluType::kIdrNLp:

case H265::NaluType::kCra: {

// If this is the first packet of an IDR, make sure we have the required

// SPS/PPS and also calculate how much extra space we need in the buffer

// to prepend the SPS/PPS to the bitstream with start codes.

if (video_header->is_first_packet_in_frame) {

if (nalu.pps_id == -1) {

RTC_LOG(LS_WARNING) << "No PPS id in IDR nalu.";

return {kRequestKeyframe};

}

pps = pps_data_.find(nalu.pps_id);

if (pps == pps_data_.end()) {

RTC_LOG(LS_WARNING)

<< "No PPS with id " << nalu.pps_id << " received";

return {kRequestKeyframe};

}

sps = sps_data_.find(pps->second.sps_id);

if (sps == sps_data_.end()) {

RTC_LOG(LS_WARNING)

<< "No SPS with id " << pps->second.sps_id << " received";

return {kRequestKeyframe};

}

vps = vps_data_.find(sps->second.vps_id);

if (vps == vps_data_.end()) {

RTC_LOG(LS_WARNING)

<< "No VPS with id " << sps->second.vps_id << " received";

return {kRequestKeyframe};

}

// Since the first packet of every keyframe should have its width and

// height set we set it here in the case of it being supplied out of

// band.

video_header->width = sps->second.width;

video_header->height = sps->second.height;

// If the VPS/SPS/PPS was supplied out of band then we will have saved

// the actual bitstream in |data|.

// This branch is not verified.

if (vps->second.data && sps->second.data && pps->second.data) {

RTC_DCHECK_GT(vps->second.size, 0);

RTC_DCHECK_GT(sps->second.size, 0);

RTC_DCHECK_GT(pps->second.size, 0);

append_vps_sps_pps = true;

}

}

break;

}

default:

break;

}

}

RTC_CHECK(!append_vps_sps_pps ||

(vps != vps_data_.end() && sps != sps_data_.end() &&

pps != pps_data_.end()));

// Calculate how much space we need for the rest of the bitstream.

size_t required_size = 0;

if (append_vps_sps_pps) {

required_size += vps->second.size + sizeof(start_code_h265);

required_size += sps->second.size + sizeof(start_code_h265);

required_size += pps->second.size + sizeof(start_code_h265);

}

if (h265_header.packetization_type == kH265AP) {

const uint8_t* nalu_ptr = bitstream.data() + 1;

while (nalu_ptr < bitstream.data() + bitstream.size()) {

RTC_DCHECK(video_header->is_first_packet_in_frame);

required_size += sizeof(start_code_h265);

// The first two bytes describe the length of a segment.

uint16_t segment_length = nalu_ptr[0] << 8 | nalu_ptr[1];

nalu_ptr += 2;

required_size += segment_length;

nalu_ptr += segment_length;

}

} else {

if (video_header->is_first_packet_in_frame)

required_size += sizeof(start_code_h265);

required_size += bitstream.size();

}

// Then we copy to the new buffer.

H265VpsSpsPpsTracker::FixedBitstream fixed;

fixed.bitstream.EnsureCapacity(required_size);

if (append_vps_sps_pps) {

// Insert VPS.

fixed.bitstream.AppendData(start_code_h265);

fixed.bitstream.AppendData(vps->second.data.get(), vps->second.size);

// Insert SPS.

fixed.bitstream.AppendData(start_code_h265);

fixed.bitstream.AppendData(sps->second.data.get(), sps->second.size);

// Insert PPS.

fixed.bitstream.AppendData(start_code_h265);

fixed.bitstream.AppendData(pps->second.data.get(), pps->second.size);

// Update codec header to reflect the newly added VPS, SPS and PPS.

H265NaluInfo vps_info;

vps_info.type = H265::NaluType::kVps;

vps_info.vps_id = vps->first;

vps_info.sps_id = -1;

vps_info.pps_id = -1;

H265NaluInfo sps_info;

sps_info.type = H265::NaluType::kSps;

sps_info.vps_id = vps->first;

sps_info.sps_id = sps->first;

sps_info.pps_id = -1;

H265NaluInfo pps_info;

pps_info.type = H265::NaluType::kPps;

pps_info.vps_id = vps->first;

pps_info.sps_id = sps->first;

pps_info.pps_id = pps->first;

if (h265_header.nalus_length + 3 <= kMaxNalusPerPacket) {

h265_header.nalus[h265_header.nalus_length++] = vps_info;

h265_header.nalus[h265_header.nalus_length++] = sps_info;

h265_header.nalus[h265_header.nalus_length++] = pps_info;

} else {

RTC_LOG(LS_WARNING) << "Not enough space in H.265 codec header to insert "

"VPS/SPS/PPS provided out-of-band.";

}

}

// Copy the rest of the bitstream and insert start codes.

if (h265_header.packetization_type == kH265AP) {

const uint8_t* nalu_ptr = bitstream.data() + 1;

while (nalu_ptr < bitstream.data() + bitstream.size()) {

fixed.bitstream.AppendData(start_code_h265);

// The first two bytes describe the length of a segment.

uint16_t segment_length = nalu_ptr[0] << 8 | nalu_ptr[1];

nalu_ptr += 2;

size_t copy_end = nalu_ptr - bitstream.data() + segment_length;

if (copy_end > bitstream.size()) {

return {kDrop};

}

fixed.bitstream.AppendData(nalu_ptr, segment_length);

nalu_ptr += segment_length;

}

} else {

if (video_header->is_first_packet_in_frame) {

fixed.bitstream.AppendData(start_code_h265);

}

fixed.bitstream.AppendData(bitstream.data(), bitstream.size());

}

fixed.action = kInsert;

return fixed;

}

void H265VpsSpsPpsTracker::InsertVpsSpsPpsNalus(

const std::vector<uint8_t>& vps,

const std::vector<uint8_t>& sps,

const std::vector<uint8_t>& pps) {

// The H.265 NAL unit header is two bytes long (H.264 uses one byte).

constexpr size_t kNaluHeaderOffset = 2;

if (vps.size() < kNaluHeaderOffset) {

RTC_LOG(LS_WARNING) << "VPS size " << vps.size() << " is smaller than "

<< kNaluHeaderOffset;

return;

}

if ((vps[0] & 0x7e) >> 1 != H265::NaluType::kVps) {

RTC_LOG(LS_WARNING) << "VPS Nalu header missing";

return;

}

if (sps.size() < kNaluHeaderOffset) {

RTC_LOG(LS_WARNING) << "SPS size " << sps.size() << " is smaller than "

<< kNaluHeaderOffset;

return;

}

if ((sps[0] & 0x7e) >> 1 != H265::NaluType::kSps) {

RTC_LOG(LS_WARNING) << "SPS Nalu header missing";

return;

}

if (pps.size() < kNaluHeaderOffset) {

RTC_LOG(LS_WARNING) << "PPS size " << pps.size() << " is smaller than "

<< kNaluHeaderOffset;

return;

}

if ((pps[0] & 0x7e) >> 1 != H265::NaluType::kPps) {

RTC_LOG(LS_WARNING) << "PPS Nalu header missing";

return;

}

absl::optional<H265VpsParser::VpsState> parsed_vps = H265VpsParser::ParseVps(

vps.data() + kNaluHeaderOffset, vps.size() - kNaluHeaderOffset);

absl::optional<H265SpsParser::SpsState> parsed_sps = H265SpsParser::ParseSps(

sps.data() + kNaluHeaderOffset, sps.size() - kNaluHeaderOffset);

absl::optional<H265PpsParser::PpsState> parsed_pps = H265PpsParser::ParsePps(

pps.data() + kNaluHeaderOffset, pps.size() - kNaluHeaderOffset);

if (!parsed_vps) {

RTC_LOG(LS_WARNING) << "Failed to parse VPS.";

}

if (!parsed_sps) {

RTC_LOG(LS_WARNING) << "Failed to parse SPS.";

}

if (!parsed_pps) {

RTC_LOG(LS_WARNING) << "Failed to parse PPS.";

}

if (!parsed_vps || !parsed_pps || !parsed_sps) {

return;

}

VpsInfo vps_info;

vps_info.size = vps.size();

uint8_t* vps_data = new uint8_t[vps_info.size];

memcpy(vps_data, vps.data(), vps_info.size);

vps_info.data.reset(vps_data);

vps_data_[parsed_vps->id] = std::move(vps_info);

SpsInfo sps_info;

sps_info.size = sps.size();

sps_info.width = parsed_sps->width;

sps_info.height = parsed_sps->height;

sps_info.vps_id = parsed_sps->vps_id;

uint8_t* sps_data = new uint8_t[sps_info.size];

memcpy(sps_data, sps.data(), sps_info.size);

sps_info.data.reset(sps_data);

sps_data_[parsed_sps->id] = std::move(sps_info);

PpsInfo pps_info;

pps_info.size = pps.size();

pps_info.sps_id = parsed_pps->sps_id;

uint8_t* pps_data = new uint8_t[pps_info.size];

memcpy(pps_data, pps.data(), pps_info.size);

pps_info.data.reset(pps_data);

pps_data_[parsed_pps->id] = std::move(pps_info);

RTC_LOG(LS_INFO) << "Inserted SPS id " << parsed_sps->id << " and PPS id "

<< parsed_pps->id << " (referencing SPS "

<< parsed_pps->sps_id << ")";

}

} // namespace video_coding

} // namespace webrtc

#ifndef MODULES_VIDEO_CODING_H265_VPS_SPS_PPS_TRACKER_H_

#define MODULES_VIDEO_CODING_H265_VPS_SPS_PPS_TRACKER_H_

#include <cstdint>

#include <map>

#include <memory>

#include <vector>

#include "api/array_view.h"

#include "modules/rtp_rtcp/source/rtp_video_header.h"

#include "rtc_base/copy_on_write_buffer.h"

namespace webrtc {

namespace video_coding {

class H265VpsSpsPpsTracker {

public:

enum PacketAction { kInsert, kDrop, kRequestKeyframe };

struct FixedBitstream {

PacketAction action;

rtc::CopyOnWriteBuffer bitstream;

};

// Returns fixed bitstream and modifies |video_header|.

FixedBitstream CopyAndFixBitstream(rtc::ArrayView<const uint8_t> bitstream,

RTPVideoHeader* video_header);

void InsertVpsSpsPpsNalus(const std::vector<uint8_t>& vps,

const std::vector<uint8_t>& sps,

const std::vector<uint8_t>& pps);

private:

struct VpsInfo {

size_t size = 0;

std::unique_ptr<uint8_t[]> data;

};

struct PpsInfo {

int sps_id = -1;

size_t size = 0;

std::unique_ptr<uint8_t[]> data;

};

struct SpsInfo {

int vps_id = -1;

size_t size = 0;

int width = -1;

int height = -1;

std::unique_ptr<uint8_t[]> data;

};

std::map<uint32_t, VpsInfo> vps_data_;

std::map<uint32_t, PpsInfo> pps_data_;

std::map<uint32_t, SpsInfo> sps_data_;

};

} // namespace video_coding

} // namespace webrtc

#endif // MODULES_VIDEO_CODING_H265_VPS_SPS_PPS_TRACKER_H_
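The aggregation-packet branch of CopyAndFixBitstream turns length-prefixed NAL units into start-code-prefixed ones. A minimal standalone sketch of that conversion, with every length checked before it is used, might look like the following. It assumes the payload starts with the two-byte AP payload header described in RFC 7798 and carries no DONL fields. `ApToAnnexB` and the constants are hypothetical names, and `std::optional` stands in for `absl::optional`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

constexpr size_t kApPayloadHeaderSize = 2;  // assumed 2-byte AP payload header
constexpr size_t kLengthFieldSize = 2;      // 16-bit size before each NAL unit
constexpr uint8_t kStartCode[] = {0, 0, 0, 1};

// Converts one aggregation packet payload into an Annex B byte stream.
// Returns nullopt when a length field points past the end of the payload.
inline std::optional<std::vector<uint8_t>> ApToAnnexB(
    const std::vector<uint8_t>& payload) {
  if (payload.size() < kApPayloadHeaderSize) return std::nullopt;
  std::vector<uint8_t> out;
  size_t pos = kApPayloadHeaderSize;
  while (pos < payload.size()) {
    // Make sure the two length bytes themselves are present.
    if (payload.size() - pos < kLengthFieldSize) return std::nullopt;
    const size_t nalu_size =
        (static_cast<size_t>(payload[pos]) << 8) | payload[pos + 1];
    pos += kLengthFieldSize;
    // Reject empty units and units that would run past the payload.
    if (nalu_size == 0 || payload.size() - pos < nalu_size) return std::nullopt;
    out.insert(out.end(), kStartCode, kStartCode + sizeof(kStartCode));
    out.insert(out.end(), payload.data() + pos,
               payload.data() + pos + nalu_size);
    pos += nalu_size;
  }
  return out;
}
```

Unlike the two-pass version in the tracker, which first sums the required size and then copies, this sketch validates and copies in a single pass, so a malformed length is rejected before any byte is read beyond the buffer.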

- Add a new enum to the jitter buffer definitions:

enum { kH264StartCodeLengthBytes = 4 };

#ifndef DISABLE_H265

enum { kH265StartCodeLengthBytes = 4 };

#endif

- Add H265 support to PacketBuffer, the RTP packet buffer:
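The rule that the FindFrames change applies to H265 packets can be stated on its own: a frame is treated as a keyframe when its packets carry both an SPS and a PPS, or when they carry an IDR or CRA slice. The sketch below is only an illustration of that rule over a flat list of NAL unit types. `LooksLikeH265Keyframe` is a hypothetical helper, and the enum holds only the type values needed here.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Subset of H.265 NAL unit types (values from the H.265 specification).
enum H265NaluType : uint8_t {
  kTrailR = 1,
  kIdrWRadl = 19,
  kIdrNLp = 20,
  kCra = 21,
  kVps = 32,
  kSps = 33,
  kPps = 34,
};

// Mirrors the condition (has_sps && has_pps) || has_idr used for H265.
inline bool LooksLikeH265Keyframe(const std::vector<uint8_t>& nalu_types) {
  bool has_sps = false;
  bool has_pps = false;
  bool has_idr = false;
  for (uint8_t type : nalu_types) {
    if (type == kSps) {
      has_sps = true;
    } else if (type == kPps) {
      has_pps = true;
    } else if (type == kIdrWRadl || type == kIdrNLp || type == kCra) {
      has_idr = true;
    }
  }
  return (has_sps && has_pps) || has_idr;
}
```

In the real buffer the flags are accumulated while walking backwards over the packets of one frame, and the first packet's frame_type is then set to key or delta accordingly, as the listing that follows shows.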

#include "common_video/h264/h264_common.h"

#ifndef DISABLE_H265

#include "common_video/h265/h265_common.h"

#endif

#include "modules/rtp_rtcp/source/rtp_header_extensions.h"

#include "modules/rtp_rtcp/source/rtp_packet_received.h"

#include "modules/rtp_rtcp/source/rtp_video_header.h"

#include "modules/video_coding/codecs/h264/include/h264_globals.h"

#ifndef DISABLE_H265

#include "modules/video_coding/codecs/h265/include/h265_globals.h"

#endif

#include "rtc_base/checks.h"

...

std::vector<std::unique_ptr<PacketBuffer::Packet>> PacketBuffer::FindFrames(

uint16_t seq_num) {

std::vector<std::unique_ptr<PacketBuffer::Packet>> found_frames;

for (size_t i = 0; i < buffer_.size() && PotentialNewFrame(seq_num); ++i) {

...

bool is_h264_keyframe = false;

bool is_h265 = false;

#ifndef DISABLE_H265

is_h265 = buffer_[start_index]->codec() == kVideoCodecH265;

bool has_h265_sps = false;

bool has_h265_pps = false;

bool has_h265_idr = false;

bool is_h265_keyframe = false;

#endif

int idr_width = -1;

int idr_height = -1;

while (true) {

++tested_packets;

if (!is_h264 && !is_h265 && buffer_[start_index]->is_first_packet_in_frame())

break;

if (is_h264) {

...

}

#ifndef DISABLE_H265

if (is_h265 && !is_h265_keyframe) {

const auto* h265_header = absl::get_if<RTPVideoHeaderH265>(

&buffer_[start_index]->video_header.video_type_header);

if (!h265_header || h265_header->nalus_length >= kMaxNalusPerPacket)

return found_frames;

for (size_t j = 0; j < h265_header->nalus_length; ++j) {

if (h265_header->nalus[j].type == H265::NaluType::kSps) {

has_h265_sps = true;

} else if (h265_header->nalus[j].type == H265::NaluType::kPps) {

has_h265_pps = true;

} else if (h265_header->nalus[j].type == H265::NaluType::kIdrWRadl

|| h265_header->nalus[j].type == H265::NaluType::kIdrNLp

|| h265_header->nalus[j].type == H265::NaluType::kCra) {

has_h265_idr = true;

}

}

if ((has_h265_sps && has_h265_pps) || has_h265_idr) {

is_h265_keyframe = true;

// Store the resolution of key frame which is the packet with

// smallest index and valid resolution; typically it's the IDR or SPS

// packet; there may be packets preceding this packet, IDR's

// resolution will be applied to them.

if (buffer_[start_index]->width() > 0 &&

buffer_[start_index]->height() > 0) {

idr_width = buffer_[start_index]->width();

idr_height = buffer_[start_index]->height();

}

}

}

#endif

...

if (is_h264) {

...

}

#ifndef DISABLE_H265

if (is_h265) {

// Warn if this is an unsafe frame.

if (has_h265_idr && (!has_h265_sps || !has_h265_pps)) {

RTC_LOG(LS_WARNING)

<< "Received H.265-IDR frame "

<< "(SPS: " << has_h265_sps << ", PPS: " << has_h265_pps

<< "). Treating as delta frame since "

<< "WebRTC-SpsPpsIdrIsH265Keyframe is always enabled.";

}

// Now that we have decided whether to treat this frame as a key frame

// or delta frame in the frame buffer, we update the field that

// determines if the RtpFrameObject is a key frame or delta frame.

const size_t first_packet_index = start_seq_num % buffer_.size();

if (is_h265_keyframe) {

buffer_[first_packet_index]->video_header.frame_type =

VideoFrameType::kVideoFrameKey;

if (idr_width > 0 && idr_height > 0) {

// IDR frame was finalized and we have the correct resolution for

// IDR; update first packet to have same resolution as IDR.

buffer_[first_packet_index]->video_header.width = idr_width;

buffer_[first_packet_index]->video_header.height =

idr_height;

}

} else {

buffer_[first_packet_index]->video_header.frame_type =

VideoFrameType::kVideoFrameDelta;

}

// If this is not a key frame, make sure there are no gaps in the

// packet sequence numbers up until this point.

if (!is_h265_keyframe && missing_packets_.upper_bound(start_seq_num) !=

missing_packets_.begin()) {

return found_frames;

}

}

#endif

...

}

return found_frames;

}

- Add H265 support to the packet's insertStartCode:

completeNALU(kNaluIncomplete),

#ifndef DISABLE_H265

insertStartCode((videoHeader.codec == kVideoCodecH264 || videoHeader.codec == kVideoCodecH265) &&

videoHeader.is_first_packet_in_frame),

#else

insertStartCode(videoHeader.codec == kVideoCodecH264 &&

videoHeader.is_first_packet_in_frame),

#endif

video_header(videoHeader),

- Support reassembling H265 packets, and add the new function GetH265NaluInfos:

+ #ifndef DISABLE_H265

+ std::vector<H265NaluInfo> VCMSessionInfo::GetH265NaluInfos() const {

+ if (packets_.empty() || packets_.front().video_header.codec != kVideoCodecH265)

+ return std::vector<H265NaluInfo>();

+ std::vector<H265NaluInfo> nalu_infos;

+ for (const VCMPacket& packet : packets_) {

+ const auto& h265 =

+ absl::get<RTPVideoHeaderH265>(packet.video_header.video_type_header);

+ for (size_t i = 0; i < h265.nalus_length; ++i) {

+ nalu_infos.push_back(h265.nalus[i]);

+ }

+ }

+ return nalu_infos;

+ }

+ #endif

...

size_t VCMSessionInfo::InsertBuffer(uint8_t* frame_buffer,

PacketIterator packet_it) {

...

const size_t kH264NALHeaderLengthInBytes = 1;

+ #ifndef DISABLE_H265

+ const size_t kH265NALHeaderLengthInBytes = 2;

+ const auto* h265 =

+ absl::get_if<RTPVideoHeaderH265>(&packet.video_header.video_type_header);

+ #endif

...

return packet.sizeBytes;

+ #ifndef DISABLE_H265

+ } else if (h265 && h265->packetization_type == kH265AP) {

+ // Similar to H264, for H265 aggregation packets, we rely on jitter buffer

+ // to remove the two length bytes between each NAL unit, and potentially add

+ // start codes.

+ size_t required_length = 0;

+ const uint8_t* nalu_ptr =

+ packet_buffer + kH265NALHeaderLengthInBytes; // skip payloadhdr

+ while (nalu_ptr < packet_buffer + packet.sizeBytes) {

+ size_t length = BufferToUWord16(nalu_ptr);

+ required_length +=

+ length + (packet.insertStartCode ? kH265StartCodeLengthBytes : 0);

+ nalu_ptr += kLengthFieldLength + length;

+ }

+ ShiftSubsequentPackets(packet_it, required_length);

+ nalu_ptr = packet_buffer + kH265NALHeaderLengthInBytes;

+ uint8_t* frame_buffer_ptr = frame_buffer + offset;

+ while (nalu_ptr < packet_buffer + packet.sizeBytes) {

+ size_t length = BufferToUWord16(nalu_ptr);

+ nalu_ptr += kLengthFieldLength;

+ // since H265 shares the same start code as H264, use the same Insert

+ // function to handle start code.

+ frame_buffer_ptr += Insert(nalu_ptr, length, packet.insertStartCode,

+ const_cast<uint8_t*>(frame_buffer_ptr));

+ nalu_ptr += length;

+ }

+ packet.sizeBytes = required_length;

+ return packet.sizeBytes;

+ #endif

}

ShiftSubsequentPackets(

packet_it, packet.sizeBytes +

(packet.insertStartCode ? kH264StartCodeLengthBytes : 0));

packet.sizeBytes =

Insert(packet_buffer, packet.sizeBytes, packet.insertStartCode,

const_cast<uint8_t*>(packet.dataPtr));

return packet.sizeBytes;

}

...

int VCMSessionInfo::InsertPacket(const VCMPacket& packet,

uint8_t* frame_buffer,

const FrameData& frame_data) {

...

+ #ifndef DISABLE_H265

+ } else if (packet.codec() == kVideoCodecH265) {

+ frame_type_ = packet.video_header.frame_type;

+ if (packet.is_first_packet_in_frame() &&

+ (first_packet_seq_num_ == -1 ||

+ IsNewerSequenceNumber(first_packet_seq_num_, packet.seqNum))) {

+ first_packet_seq_num_ = packet.seqNum;

+ }

+ if (packet.markerBit &&

+ (last_packet_seq_num_ == -1 ||

+ IsNewerSequenceNumber(packet.seqNum, last_packet_seq_num_))) {

+ last_packet_seq_num_ = packet.seqNum;

+ }

+ #endif

...

return static_cast<int>(returnLength);

}

std::vector<NaluInfo> GetNaluInfos() const;

#ifndef DISABLE_H265

std::vector<H265NaluInfo> GetH265NaluInfos() const;

#endif

- Add the two new files to the ninja build:

if (rtc_use_h265) {

sources += [

"h265_vps_sps_pps_tracker.cc",

"h265_vps_sps_pps_tracker.h",

]

}

RTP-related support

- Handle the new enum value in the minimum-bitrate switch so that the build does not fail:

absl::optional<DataRate> GetExperimentalMinVideoBitrate(VideoCodecType type) {

...

#ifndef DISABLE_H265

case kVideoCodecH265:

#endif

case kVideoCodecGeneric:

...

}

- Add H265 support to RTP payload handling:

void RtpVideoStreamReceiver::OnReceivedPayloadData(

rtc::CopyOnWriteBuffer codec_payload,

const RtpPacketReceived& rtp_packet,

const RTPVideoHeader& video) {

...

#ifndef DISABLE_H265

} else if (packet->codec() == kVideoCodecH265) {

// Only when we start to receive packets will we know what payload type

// that will be used. When we know the payload type insert the correct

// sps/pps into the tracker.

if (packet->payload_type != last_payload_type_) {

last_payload_type_ = packet->payload_type;

InsertSpsPpsIntoTracker(packet->payload_type);

}

video_coding::H265VpsSpsPpsTracker::FixedBitstream fixed =

h265_tracker_.CopyAndFixBitstream(

rtc::MakeArrayView(codec_payload.cdata(), codec_payload.size()),

&packet->video_header);

switch (fixed.action) {

case video_coding::H265VpsSpsPpsTracker::kRequestKeyframe:

rtcp_feedback_buffer_.RequestKeyFrame();

rtcp_feedback_buffer_.SendBufferedRtcpFeedback();

ABSL_FALLTHROUGH_INTENDED;

case video_coding::H265VpsSpsPpsTracker::kDrop:

return;

case video_coding::H265VpsSpsPpsTracker::kInsert:

packet->video_payload = std::move(fixed.bitstream);

break;

}

#endif

} else {

packet->video_payload = std::move(codec_payload);

}

}

#ifndef DISABLE_H265

#include "modules/video_coding/h265_vps_sps_pps_tracker.h"

#endif

...

std::map<uint8_t, std::unique_ptr<VideoRtpDepacketizer>> payload_type_map_;

#ifndef DISABLE_H265

video_coding::H265VpsSpsPpsTracker h265_tracker_;

#endif

void RtpVideoStreamReceiver2::OnReceivedPayloadData(

rtc::CopyOnWriteBuffer codec_payload,

const RtpPacketReceived& rtp_packet,

const RTPVideoHeader& video) {

...

#ifndef DISABLE_H265

} else if (packet->codec() == kVideoCodecH265) {

// Only when we start to receive packets will we know what payload type

// that will be used. When we know the payload type insert the correct

// sps/pps into the tracker.

if (packet->payload_type != last_payload_type_) {

last_payload_type_ = packet->payload_type;

InsertSpsPpsIntoTracker(packet->payload_type);

}

video_coding::H265VpsSpsPpsTracker::FixedBitstream fixed =

h265_tracker_.CopyAndFixBitstream(

rtc::MakeArrayView(codec_payload.cdata(), codec_payload.size()),

&packet->video_header);

switch (fixed.action) {

case video_coding::H265VpsSpsPpsTracker::kRequestKeyframe:

rtcp_feedback_buffer_.RequestKeyFrame();

rtcp_feedback_buffer_.SendBufferedRtcpFeedback();

ABSL_FALLTHROUGH_INTENDED;

case video_coding::H265VpsSpsPpsTracker::kDrop:

return;

case video_coding::H265VpsSpsPpsTracker::kInsert:

packet->video_payload = std::move(fixed.bitstream);

break;

}

#endif

} else {

packet->video_payload = std::move(codec_payload);

}

#ifndef DISABLE_H265

#include "modules/video_coding/h265_vps_sps_pps_tracker.h"

#endif

...

std::map<uint8_t, std::unique_ptr<VideoRtpDepacketizer>> payload_type_map_

RTC_GUARDED_BY(worker_task_checker_);

#ifndef DISABLE_H265

video_coding::H265VpsSpsPpsTracker h265_tracker_;

#endif

- Statistics support:

enum HistogramCodecType {

kVideoUnknown = 0,

kVideoVp8 = 1,

kVideoVp9 = 2,

kVideoH264 = 3,

#ifndef DISABLE_H265

kVideoH265 = 4,

#endif

kVideoMax = 64,

};

HistogramCodecType PayloadNameToHistogramCodecType(

const std::string& payload_name) {

VideoCodecType codecType = PayloadStringToCodecType(payload_name);

switch (codecType) {

case kVideoCodecVP8:

return kVideoVp8;

case kVideoCodecVP9:

return kVideoVp9;

case kVideoCodecH264:

return kVideoH264;

#ifndef DISABLE_H265

case kVideoCodecH265:

return kVideoH265;

#endif

default:

return kVideoUnknown;

}

}

- Decoder initialization support in the receive stream:

VideoCodec CreateDecoderVideoCodec(const VideoReceiveStream::Decoder& decoder) {

VideoCodec codec;

memset(&codec, 0, sizeof(codec));

codec.codecType = PayloadStringToCodecType(decoder.video_format.name);

...

return associated_codec;

#ifndef DISABLE_H265

} else if (codec.codecType == kVideoCodecH265) {

*(codec.H265()) = VideoEncoder::GetDefaultH265Settings();

#endif

}

...

return codec;

}

- Encoder support in the video stream encoder:

bool RequiresEncoderReset(const VideoCodec& prev_send_codec,

const VideoCodec& new_send_codec,

bool was_encode_called_since_last_initialization) {

...

case kVideoCodecH264:

if (new_send_codec.H264() != prev_send_codec.H264()) {

return true;

}

break;

#ifndef DISABLE_H265

case kVideoCodecH265:

if (new_send_codec.H265() != prev_send_codec.H265()) {

return true;

}

break;

#endif

}

Codec support

- Add the H265 type to the video codec types:

#include "api/video/video_codec_type.h"

#ifndef DISABLE_H265

enum VideoCodecType {

// Java_cpp_enum.py does not allow ifdef in enum class,

// so we have to create two version of VideoCodecType here

kVideoCodecGeneric = 0,

kVideoCodecVP8,

kVideoCodecVP9,

kVideoCodecAV1,

kVideoCodecH264,

kVideoCodecH265,

kVideoCodecMultiplex,

};

#else

enum VideoCodecType {

// There are various memset(..., 0, ...) calls in the code that rely on

// kVideoCodecGeneric being zero.

kVideoCodecGeneric = 0,

kVideoCodecVP8,

kVideoCodecVP9,

kVideoCodecAV1,

kVideoCodecH264,

kVideoCodecMultiplex,

};

#endif

- Basic codec information support:
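The core of this step is a two-way mapping between the codec enum and the payload name that appears in the SDP, where the name lookup ignores case. A reduced sketch of that round trip, under stated assumptions: the enum below is a cut-down stand-in for VideoCodecType, and the local `EqualsIgnoreCase` is a hypothetical replacement for `absl::EqualsIgnoreCase` so that the block compiles without Abseil.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>

// Cut-down stand-in for VideoCodecType; Generic must stay zero.
enum VideoCodecType { kVideoCodecGeneric = 0, kVideoCodecH264, kVideoCodecH265 };

constexpr char kPayloadNameGeneric[] = "Generic";
constexpr char kPayloadNameH264[] = "H264";
constexpr char kPayloadNameH265[] = "H265";

// Hypothetical local replacement for absl::EqualsIgnoreCase (ASCII only).
inline bool EqualsIgnoreCase(const std::string& a, const std::string& b) {
  if (a.size() != b.size()) return false;
  for (size_t i = 0; i < a.size(); ++i) {
    if (std::tolower(static_cast<unsigned char>(a[i])) !=
        std::tolower(static_cast<unsigned char>(b[i]))) {
      return false;
    }
  }
  return true;
}

inline const char* CodecTypeToPayloadString(VideoCodecType type) {
  switch (type) {
    case kVideoCodecH264:
      return kPayloadNameH264;
    case kVideoCodecH265:
      return kPayloadNameH265;
    case kVideoCodecGeneric:
      return kPayloadNameGeneric;
  }
  return kPayloadNameGeneric;  // not reached for valid enum values
}

inline VideoCodecType PayloadStringToCodecType(const std::string& name) {
  if (EqualsIgnoreCase(name, kPayloadNameH264)) return kVideoCodecH264;
  if (EqualsIgnoreCase(name, kPayloadNameH265)) return kVideoCodecH265;
  return kVideoCodecGeneric;  // unknown names fall back to Generic
}
```

Because unknown names fall back to the generic type, a build that leaves H265 out of this mapping would silently treat an "H265" payload as generic. The listing that follows adds the real entries.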

constexpr char kPayloadNameH264[] = "H264";

#ifndef DISABLE_H265

constexpr char kPayloadNameH265[] = "H265";

#endif

...

#ifndef DISABLE_H265

bool VideoCodecH265::operator==(const VideoCodecH265& other) const {

return (frameDroppingOn == other.frameDroppingOn &&

keyFrameInterval == other.keyFrameInterval &&

vpsLen == other.vpsLen && spsLen == other.spsLen &&

ppsLen == other.ppsLen &&

(vpsLen == 0 || memcmp(vpsData, other.vpsData, vpsLen) == 0) &&

(spsLen == 0 || memcmp(spsData, other.spsData, spsLen) == 0) &&

(ppsLen == 0 || memcmp(ppsData, other.ppsData, ppsLen) == 0));

}

#endif

...

const VideoCodecH264& VideoCodec::H264() const {

RTC_DCHECK_EQ(codecType, kVideoCodecH264);

return codec_specific_.H264;

}

#ifndef DISABLE_H265

VideoCodecH265* VideoCodec::H265() {

RTC_DCHECK_EQ(codecType, kVideoCodecH265);

return &codec_specific_.H265;

}

const VideoCodecH265& VideoCodec::H265() const {

RTC_DCHECK_EQ(codecType, kVideoCodecH265);

return codec_specific_.H265;

}

#endif

const char* CodecTypeToPayloadString(VideoCodecType type) {

...

case kVideoCodecH264:

return kPayloadNameH264;

#ifndef DISABLE_H265

case kVideoCodecH265:

return kPayloadNameH265;

#endif

case kVideoCodecMultiplex:

return kPayloadNameMultiplex;

case kVideoCodecGeneric:

return kPayloadNameGeneric;

}

VideoCodecType PayloadStringToCodecType(const std::string& name) {

...

if (absl::EqualsIgnoreCase(name, kPayloadNameH264))

return kVideoCodecH264;

#ifndef DISABLE_H265

if (absl::EqualsIgnoreCase(name, kPayloadNameH265))

return kVideoCodecH265;

#endif

if (absl::EqualsIgnoreCase(name, kPayloadNameMultiplex))

return kVideoCodecMultiplex;

return kVideoCodecGeneric;

}

#ifndef DISABLE_H265

struct VideoCodecH265 {

bool operator==(const VideoCodecH265& other) const;

bool operator!=(const VideoCodecH265& other) const {

return !(*this == other);

}

bool frameDroppingOn;

int keyFrameInterval;

const uint8_t* vpsData;

size_t vpsLen;

const uint8_t* spsData;

size_t spsLen;

const uint8_t* ppsData;

size_t ppsLen;

};

#endif

...

union VideoCodecUnion {

VideoCodecVP8 VP8;

VideoCodecVP9 VP9;

VideoCodecH264 H264;

#ifndef DISABLE_H265

VideoCodecH265 H265;

#endif

};

...

class RTC_EXPORT VideoCodec {

public:

...

#ifndef DISABLE_H265

VideoCodecH265* H265();

const VideoCodecH265& H265() const;

#endif

private:

// TODO(hta): Consider replacing the union with a pointer type.

// This will allow removing the VideoCodec* types from this file.

VideoCodecUnion codec_specific_;

};

- Decoder software-fallback support:

void VideoDecoderSoftwareFallbackWrapper::UpdateFallbackDecoderHistograms() {

switch (codec_settings_.codecType) {

...

case kVideoCodecH264:

RTC_HISTOGRAM_COUNTS_100000(kFallbackHistogramsUmaPrefix + "H264",

hw_decoded_frames_since_last_fallback_);

break;

#ifndef DISABLE_H265

case kVideoCodecH265:

RTC_HISTOGRAM_COUNTS_100000(kFallbackHistogramsUmaPrefix + "H265",

hw_decoded_frames_since_last_fallback_);

break;

#endif

case kVideoCodecMultiplex:

RTC_HISTOGRAM_COUNTS_100000(kFallbackHistogramsUmaPrefix + "Multiplex",

hw_decoded_frames_since_last_fallback_);

break;

}

}

- Encoder configuration:

void VideoEncoderConfig::EncoderSpecificSettings::FillEncoderSpecificSettings(

VideoCodec* codec) const {

if (codec->codecType == kVideoCodecH264) {

FillVideoCodecH264(codec->H264());

} else if (codec->codecType == kVideoCodecVP8) {

FillVideoCodecVp8(codec->VP8());

} else if (codec->codecType == kVideoCodecVP9) {

FillVideoCodecVp9(codec->VP9());

#ifndef DISABLE_H265

} else if (codec->codecType == kVideoCodecH265) {

FillVideoCodecH265(codec->H265());

#endif

} else {

RTC_NOTREACHED() << "Encoder specifics set/used for unknown codec type.";

}

}

#ifndef DISABLE_H265

void VideoEncoderConfig::EncoderSpecificSettings::FillVideoCodecH265(

VideoCodecH265* h265_settings) const {

RTC_NOTREACHED();

}

#endif

#ifndef DISABLE_H265

VideoEncoderConfig::H265EncoderSpecificSettings::H265EncoderSpecificSettings(

const VideoCodecH265& specifics)

: specifics_(specifics) {}

void VideoEncoderConfig::H265EncoderSpecificSettings::FillVideoCodecH265(

VideoCodecH265* h265_settings) const {

*h265_settings = specifics_;

}

#endif

class EncoderSpecificSettings : public rtc::RefCountInterface {

public:

...

virtual void FillVideoCodecH264(VideoCodecH264* h264_settings) const;

#ifndef DISABLE_H265

virtual void FillVideoCodecH265(VideoCodecH265* h265_settings) const;

#endif

private:

~EncoderSpecificSettings() override {}

friend class VideoEncoderConfig;

};

#ifndef DISABLE_H265

class H265EncoderSpecificSettings : public EncoderSpecificSettings {

public:

explicit H265EncoderSpecificSettings(const VideoCodecH265& specifics);

void FillVideoCodecH265(VideoCodecH265* h265_settings) const override;

private:

VideoCodecH265 specifics_;

};

#endif

#ifndef DISABLE_H265

VideoCodecH265 VideoEncoder::GetDefaultH265Settings() {

VideoCodecH265 h265_settings;

memset(&h265_settings, 0, sizeof(h265_settings));

// h265_settings.profile = kProfileBase;

h265_settings.frameDroppingOn = true;

h265_settings.keyFrameInterval = 3000;

h265_settings.spsData = nullptr;

h265_settings.spsLen = 0;

h265_settings.ppsData = nullptr;

h265_settings.ppsLen = 0;

return h265_settings;

}

#endif

static VideoCodecH264 GetDefaultH264Settings();

#ifndef DISABLE_H265

static VideoCodecH265 GetDefaultH265Settings();

#endif

- Payload configuration:

void PopulateRtpWithCodecSpecifics(const CodecSpecificInfo& info,

absl::optional<int> spatial_index,

RTPVideoHeader* rtp) {

switch (info.codecType) {

...

case kVideoCodecH264: {

auto& h264_header = rtp->video_type_header.emplace<RTPVideoHeaderH264>();

h264_header.packetization_mode =

info.codecSpecific.H264.packetization_mode;

rtp->simulcastIdx = spatial_index.value_or(0);

return;

}

#ifndef DISABLE_H265

case kVideoCodecH265: {

auto& h265_header = rtp->video_type_header.emplace<RTPVideoHeaderH265>();

h265_header.packetization_mode =

info.codecSpecific.H265.packetization_mode;

return;

}

#endif

...

}

}
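In the H265 case of PopulateRtpWithCodecSpecifics, the object returned by `emplace` has to be bound by reference, otherwise the packetization mode is written to a temporary copy and never reaches the header stored in the variant. The sketch below shows the difference, using `std::variant` as a stand-in for `absl::variant` (an assumption made so the block has no external dependencies). `HeaderH264`, `HeaderH265` and the two setter functions are hypothetical.

```cpp
#include <cassert>
#include <variant>

// Hypothetical, reduced stand-ins for RTPVideoHeaderH264 / RTPVideoHeaderH265.
struct HeaderH264 { int packetization_mode = 0; };
struct HeaderH265 { int packetization_mode = 0; };

using TypeHeader = std::variant<std::monostate, HeaderH264, HeaderH265>;

// `auto` deduces a value type, so this modifies a copy of the stored header.
inline void SetModeByCopy(TypeHeader& header, int mode) {
  auto copy = header.emplace<HeaderH265>();
  copy.packetization_mode = mode;  // lost when `copy` goes out of scope
}

// `auto&` binds to the object that lives inside the variant.
inline void SetModeByReference(TypeHeader& header, int mode) {
  auto& stored = header.emplace<HeaderH265>();
  stored.packetization_mode = mode;
}
```

With SetModeByCopy the variant ends up holding a default-constructed HeaderH265, while SetModeByReference leaves the requested mode in place. The same file also needs an H265 case in RtpPayloadParams::SetGeneric: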

void RtpPayloadParams::SetGeneric(const CodecSpecificInfo* codec_specific_info,

int64_t frame_id,

bool is_keyframe,

RTPVideoHeader* rtp_video_header) {

...

switch (rtp_video_header->codec) {

...

case VideoCodecType::kVideoCodecH264:

if (codec_specific_info) {

H264ToGeneric(codec_specific_info->codecSpecific.H264, frame_id,

is_keyframe, rtp_video_header);

}

return;

#ifndef DISABLE_H265

case VideoCodecType::kVideoCodecH265:

#endif

case VideoCodecType::kVideoCodecMultiplex:

return;

}

}

Reference:

Author: 8MilesRD

Link: https://juejin.cn/post/7155291649949171719
