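Working in my spare time, I spent about two weeks writing the server and web-client code, and it is finally done. With some further optimization it should be good enough for basic use. The basic idea: the server packetizes the media over SCTP (the WebRTC data channel). This works much like RTP: only the packetization differs, the overall logic is unchanged, and the same sending socket and receiving port are used.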

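Player

The player accepts a `canvas`, `video`, or `div` element and then, based on the parameters, chooses which element to play the stream on:

- If the stream is not H.265, play it in a `<video>` element (native API).

- If the stream is H.265 and the browser supports WebCodecs, play it in a `<video>` element (native API).

- If the stream is H.265 and the browser does not support WebCodecs, render it on a `<canvas>` (displayed with WebGL).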
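The selection rules above can be sketched as follows. This is a minimal sketch, not the player's actual code: `chooseRenderTarget`, `supportsWebCodecs` and the returned labels are hypothetical names, and probing with `VideoDecoder.isConfigSupported()` (using the `hev1.1.6.L93.B0` codec string from webcodecs.js) is an assumption about how "supports WebCodecs" could be detected. The decoder class is a parameter so the logic can run outside a browser.

```javascript
// Hypothetical sketch of the player's element selection; only the three rules come from the text.
const HEVC_CODEC = 'hev1.1.6.L93.B0'; // codec string configured in webcodecs.js

// Resolves to true when a WebCodecs VideoDecoder can be configured for `codec`.
async function supportsWebCodecs(codec, VideoDecoderImpl = globalThis.VideoDecoder) {
  if (!VideoDecoderImpl) return false; // no WebCodecs at all (or not a secure context)
  try {
    const { supported } = await VideoDecoderImpl.isConfigSupported({ codec });
    return supported === true;
  } catch (e) {
    return false; // isConfigSupported rejects with a TypeError for an invalid config
  }
}

// Applies the three rules: non-H.265 -> <video>, H.265 + WebCodecs -> <video>, else canvas/WebGL.
async function chooseRenderTarget(isH265, VideoDecoderImpl = globalThis.VideoDecoder) {
  if (!isH265) return 'video-native';
  if (await supportsWebCodecs(HEVC_CODEC, VideoDecoderImpl)) return 'video-webcodecs';
  return 'canvas-webgl'; // wasm (FFmpeg) software decoding + WebGL display
}
```

In a browser, `await chooseRenderTarget(true)` uses the global `VideoDecoder`; a browser without WebCodecs, or one that rejects the HEVC config, falls through to the canvas path.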

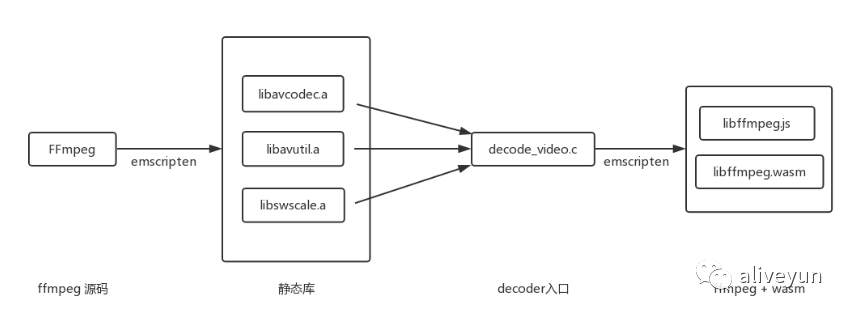

1. Software decoding

FFmpeg is compiled to WebAssembly and called from JavaScript. Build commands:

```shell
mkdir goldvideo
cd goldvideo
# clone both repositories side by side: the build script expects ../ffmpeg
git clone https://git.ffmpeg.org/ffmpeg.git
git clone https://github.com/goldvideo/decoder_wasm.git
cd decoder_wasm
```

Directory structure:

```text
goldvideo
├── ffmpeg
└── decoder_wasm
```

Replace the code in `decode_video.c` with the following:

```c
/**
 * @file
 * video decoding with libavcodec API example
 *
 * decode_video.c
 */

#include <stdarg.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#include <libavcodec/avcodec.h>

/* Invoked once per decoded frame with the Y/U/V plane pointers and their line sizes. */
typedef void (*VideoCallback)(unsigned char *data_y, unsigned char *data_u, unsigned char *data_v,
                              int line1, int line2, int line3, int width, int height, long pts);

#define INBUF_SIZE 4096
#define AVPACKET_FLAG_KEY 0x01

typedef enum ErrorCode
{
    kErrorCode_Success = 0,
    kErrorCode_Invalid_Param,
    kErrorCode_Invalid_State,
    kErrorCode_Invalid_Data,
    kErrorCode_Invalid_Format,
    kErrorCode_NULL_Pointer,
    kErrorCode_Open_File_Error,
    kErrorCode_Eof,
    kErrorCode_FFmpeg_Error
} ErrorCode;

typedef enum LogLevel
{
    kLogLevel_None, // Not logging.
    kLogLevel_Core, // Only logging core module (without ffmpeg).
    kLogLevel_All   // Logging all, with ffmpeg.
} LogLevel;

typedef enum DecoderType
{
    kDecoderType_H264,
    kDecoderType_H265
} DecoderType;

LogLevel logLevel = kLogLevel_None;
DecoderType decoderType = kDecoderType_H265;

/* printf-style logger, silent when logLevel is kLogLevel_None. */
void simpleLog(const char *format, ...)
{
    if (logLevel == kLogLevel_None)
    {
        return;
    }

    char szBuffer[1024] = {0};
    const char *tag = "Core";
    int prefixLength = snprintf(szBuffer, sizeof(szBuffer), "[%s][DT] ", tag);

    va_list ap;
    va_start(ap, format);
    vsnprintf(szBuffer + prefixLength, sizeof(szBuffer) - prefixLength, format, ap);
    va_end(ap);

    printf("%s\n", szBuffer);
}

/* Forwards FFmpeg's own log messages to simpleLog (installed only with kLogLevel_All). */
void ffmpegLogCallback(void *ptr, int level, const char *fmt, va_list vl)
{
    static int printPrefix = 1;
    char line[1024] = {0};
    AVClass *avc = ptr ? *(AVClass **)ptr : NULL;

    if (level > AV_LOG_DEBUG)
    {
        return;
    }

    if (printPrefix && avc)
    {
        if (avc->parent_log_context_offset)
        {
            AVClass **parent = *(AVClass ***)(((uint8_t *)ptr) + avc->parent_log_context_offset);
            if (parent && *parent)
            {
                snprintf(line, sizeof(line), "[%s @ %p] ", (*parent)->item_name(parent), parent);
            }
        }
        snprintf(line + strlen(line), sizeof(line) - strlen(line), "[%s @ %p] ", avc->item_name(ptr), ptr);
    }

    /* vsnprintf always NUL-terminates, so no extra terminator is needed */
    vsnprintf(line + strlen(line), sizeof(line) - strlen(line), fmt, vl);
    simpleLog("%s", line);
}

VideoCallback videoCallback = NULL;

/* Sends one packet (NULL = drain) and passes every frame it produces to videoCallback. */
static ErrorCode decode(AVCodecContext *dec_ctx, AVPacket *pkt, AVFrame *outFrame)
{
    ErrorCode res = kErrorCode_Success;
    int ret = avcodec_send_packet(dec_ctx, pkt);
    if (ret < 0)
    {
        simpleLog("Error sending a packet for decoding\n");
        return kErrorCode_FFmpeg_Error;
    }

    while (ret >= 0)
    {
        ret = avcodec_receive_frame(dec_ctx, outFrame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
        {
            break; /* needs more input, or fully drained */
        }
        else if (ret < 0)
        {
            simpleLog("Error during decoding\n");
            res = kErrorCode_FFmpeg_Error;
            break;
        }
        if (videoCallback)
        {
            videoCallback(outFrame->data[0], outFrame->data[1], outFrame->data[2],
                          outFrame->linesize[0], outFrame->linesize[1], outFrame->linesize[2],
                          outFrame->width, outFrame->height, (long)outFrame->pts);
        }
    }
    return res;
}

int isInit = 0;
const AVCodec *codec = NULL;
AVCodecContext *c = NULL;
AVPacket *pkt = NULL;
AVFrame *frame = NULL;
AVFrame *outFrame = NULL;

/* codecType: kDecoderType_H264 or kDecoderType_H265; callback: function pointer from addFunction. */
ErrorCode openDecoder(int codecType, long callback, int logLv)
{
    ErrorCode ret = kErrorCode_Success;
    do
    {
        simpleLog("Initialize decoder.");
        if (isInit != 0)
        {
            break;
        }

        decoderType = (DecoderType)codecType;
        logLevel = (LogLevel)logLv;
        av_log_set_level(AV_LOG_FATAL);
        if (logLevel == kLogLevel_All)
        {
            av_log_set_callback(ffmpegLogCallback);
        }

        /* find the video decoder (the build script below only enables hevc) */
        codec = avcodec_find_decoder(decoderType == kDecoderType_H264 ? AV_CODEC_ID_H264 : AV_CODEC_ID_H265);
        if (!codec)
        {
            simpleLog("Codec not found\n");
            ret = kErrorCode_FFmpeg_Error;
            break;
        }

        c = avcodec_alloc_context3(codec);
        if (!c)
        {
            simpleLog("Could not allocate video codec context\n");
            ret = kErrorCode_FFmpeg_Error;
            break;
        }

        if (avcodec_open2(c, codec, NULL) < 0)
        {
            simpleLog("Could not open codec\n");
            ret = kErrorCode_FFmpeg_Error;
            break;
        }

        frame = av_frame_alloc();
        outFrame = av_frame_alloc();
        if (!frame || !outFrame)
        {
            simpleLog("Could not allocate video frame\n");
            ret = kErrorCode_FFmpeg_Error;
            break;
        }

        pkt = av_packet_alloc();
        if (!pkt)
        {
            simpleLog("Could not allocate video packet\n");
            ret = kErrorCode_FFmpeg_Error;
            break;
        }

        videoCallback = (VideoCallback)callback;
        isInit = 1;
    } while (0);

    simpleLog("Decoder initialized %d.", ret);
    return ret;
}

/* Decodes one access unit; bit 0 of flag (AVPACKET_FLAG_KEY) marks a key frame. */
ErrorCode decodeData(unsigned char *data, size_t data_size, long pts, int flag)
{
    if (!isInit)
    {
        return kErrorCode_Invalid_State;
    }
    if (!data || data_size == 0)
    {
        return kErrorCode_Invalid_Param;
    }

    /* Reuse the heap-allocated packet: a stack AVPacket is deprecated in current FFmpeg.
       The data is not reference-counted, so avcodec_send_packet copies it. */
    pkt->data = data;
    pkt->size = (int)data_size;
    pkt->pts = pts;
    pkt->dts = pts;
    pkt->flags = (flag & AVPACKET_FLAG_KEY) ? AV_PKT_FLAG_KEY : 0;
    pkt->stream_index = 0;

    ErrorCode ret = decode(c, pkt, frame);

    av_packet_unref(pkt); /* detaches the caller's buffer and resets the fields */
    return ret;
}

/* Drains frames still buffered in the decoder, then resets it so decoding can continue. */
ErrorCode flushDecoder()
{
    if (!isInit)
    {
        return kErrorCode_Invalid_State;
    }
    ErrorCode ret = decode(c, NULL, frame); /* a NULL packet enters draining mode */
    avcodec_flush_buffers(c);
    return ret;
}

ErrorCode closeDecoder()
{
    if (c != NULL)
    {
        avcodec_free_context(&c);
        simpleLog("Video codec context closed.");
    }
    /* these helpers accept NULL and reset the pointer to NULL */
    av_frame_free(&frame);
    av_frame_free(&outFrame);
    av_packet_free(&pkt);
    isInit = 0;
    simpleLog("All buffer released.");
    return kErrorCode_Success;
}

int main(int argc, char **argv)
{
    return 0;
}
```

build_decoder_265.sh

```shell
echo "Beginning Build:"
# Run from goldvideo/decoder_wasm; FFmpeg is installed into ./ffmpeg
rm -rf ffmpeg
mkdir -p ffmpeg
cd ../ffmpeg
make clean
emconfigure ./configure --cc="emcc" --cxx="em++" --ar="emar" --prefix=$(pwd)/../decoder_wasm/ffmpeg --enable-cross-compile --target-os=none --arch=x86_64 --cpu=generic \
  --enable-gpl --enable-version3 --disable-avdevice --disable-avformat --disable-swresample --disable-postproc --disable-avfilter \
  --disable-programs --disable-logging --disable-everything \
  --disable-ffplay --disable-ffprobe --disable-asm --disable-doc --disable-devices --disable-network \
  --disable-hwaccels --disable-parsers --disable-bsfs --disable-debug --disable-protocols --disable-indevs --disable-outdevs \
  --enable-decoder=hevc --enable-parser=hevc
make
make install
cd ../decoder_wasm
./build_decoder_wasm.sh
```

build_decoder_wasm.sh

```shell
rm -rf dist/libffmpeg.wasm dist/libffmpeg.js
mkdir -p dist
export TOTAL_MEMORY=67108864
# _malloc/_free let the JavaScript side copy encoded packets into the wasm heap
export EXPORTED_FUNCTIONS="[ \
  '_openDecoder', \
  '_flushDecoder', \
  '_closeDecoder', \
  '_decodeData', \
  '_malloc', \
  '_free', \
  '_main'
]"

echo "Running Emscripten..."
# Newer Emscripten releases rename these settings to INITIAL_MEMORY,
# EXPORTED_RUNTIME_METHODS and ALLOW_TABLE_GROWTH.
emcc decode_video.c ffmpeg/lib/libavcodec.a ffmpeg/lib/libavutil.a ffmpeg/lib/libswscale.a \
  -O2 \
  -I "ffmpeg/include" \
  -s WASM=1 \
  -s TOTAL_MEMORY=${TOTAL_MEMORY} \
  -s EXPORTED_FUNCTIONS="${EXPORTED_FUNCTIONS}" \
  -s EXTRA_EXPORTED_RUNTIME_METHODS="['addFunction']" \
  -s RESERVED_FUNCTION_POINTERS=14 \
  -s FORCE_FILESYSTEM=1 \
  -o dist/libffmpeg.js
echo "Finished Build"
```
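The exported `_openDecoder`/`_decodeData` functions and the `addFunction` runtime method imply a JavaScript glue layer roughly like the one below. This is a hedged sketch: `openH265Decoder`, `decodeFrame` and `yuvFrameFromHeap` are hypothetical helpers, and it assumes `Module.HEAPU8` is reachable and that `_malloc`/`_free` are exported (newer Emscripten releases require listing them in `EXPORTED_FUNCTIONS`). The signature string mirrors `VideoCallback` in decode_video.c: no return value and nine arguments that are all 32-bit integers in wasm32. The frame object carries the same fields that `drawFrame()` destructures.

```javascript
// Hypothetical glue for dist/libffmpeg.js; names are illustrative, not from the original project.

// Copies one decoded 4:2:0 frame out of the wasm heap into the shape drawFrame() expects.
function yuvFrameFromHeap(heap, ptrY, ptrU, ptrV, strideY, strideU, strideV, width, height) {
  const chromaRows = (height + 1) >> 1; // chroma planes have half the rows, rounded up
  return {
    // slice() copies: FFmpeg reuses these buffers for the next decoded frame
    buf_y: heap.slice(ptrY, ptrY + strideY * height),
    buf_u: heap.slice(ptrU, ptrU + strideU * chromaRows),
    buf_v: heap.slice(ptrV, ptrV + strideV * chromaRows),
    width,
    height,
    stride_y: strideY,
    stride_u: strideU,
    stride_v: strideV,
  };
}

// Registers a JS function as VideoCallback and opens the H.265 decoder.
function openH265Decoder(Module, onFrame, logLevel = 0) {
  // VideoCallback: void(ptr, ptr, ptr, int, int, int, int, int, long) -> nine i32 args in wasm32
  const signature = 'v' + 'i'.repeat(9);
  const callbackPtr = Module.addFunction(
    (y, u, v, line1, line2, line3, width, height, pts) =>
      onFrame(yuvFrameFromHeap(Module.HEAPU8, y, u, v, line1, line2, line3, width, height), pts),
    signature
  );
  const kDecoderType_H265 = 1; // matches the DecoderType enum in decode_video.c
  const ret = Module._openDecoder(kDecoderType_H265, callbackPtr, logLevel);
  if (ret !== 0) throw new Error(`openDecoder failed with ErrorCode ${ret}`);
  return callbackPtr;
}

// Copies one encoded access unit into the wasm heap and decodes it.
// flag: 1 = key frame (AVPACKET_FLAG_KEY). Decoded frames reach onFrame synchronously.
function decodeFrame(Module, bytes, pts, flag) {
  const ptr = Module._malloc(bytes.length);
  if (!ptr) throw new Error('malloc failed');
  try {
    Module.HEAPU8.set(bytes, ptr);
    return Module._decodeData(ptr, bytes.length, pts, flag);
  } finally {
    Module._free(ptr);
  }
}
```

Copying with `slice()` rather than taking `subarray()` views is deliberate: the next `avcodec_receive_frame()` call overwrites the frame buffers, so a view would change under the renderer.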

2. Software decoding: display

Rendering uses only yuv-canvas and yuv-buffer:

```javascript
// Renders one decoded I420 frame with yuv-buffer + yuv-canvas (WebGL)
drawFrame(data) {
  const { buf_y, buf_u, buf_v, width, height, stride_y, stride_u, stride_v } = data

  // 4:2:0 chroma planes are half size, rounded up for odd dimensions
  const format = yuvBuffer.format({
    width,
    height,
    chromaWidth: (width + 1) >> 1,
    chromaHeight: (height + 1) >> 1
  })

  const y = { bytes: buf_y, stride: stride_y }
  const u = { bytes: buf_u, stride: stride_u }
  const v = { bytes: buf_v, stride: stride_v }

  const frameDisplay = yuvBuffer.frame(format, y, u, v)
  this.render.drawFrame(frameDisplay)
}
```

3. Hardware decoding

Check whether the browser supports hardware decoding:

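```javascript
static isSupported(codec) {
  // const browserInfo = getBrowser();
  // MediaSource.isTypeSupported reports MSE support for the codec string;
  // WebCodecs is only exposed in secure contexts (HTTPS or localhost).
  return !!(window.MediaSource && window.MediaSource.isTypeSupported(codec) &&
    (location.protocol === 'https:' || location.hostname === 'localhost'));
}
```

webcodecs.js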

```javascript
import Config from "./config.js";

export default class WebcodecsDecoder {
  constructor(decode) {
    this.decode = decode
    this.adecoder_ = null;
    this.vdecoder_ = null;
    this.pc_ = null;
    this.datachannel_ = null;
    this.channelOpen_ = false;
    this.waitKeyframe_ = true;
  }

  openDecode() {
    // VideoDecoder config
    this.vdecoder_ = new VideoDecoder({
      output: this.handleVideoDecoded.bind(this),
      error: (error) => {
        console.error("video decoder " + error);
      }
    });
    const config = {
      // codec: 'avc1.42002a', //hev1.1.6.L123.b0
      codec: 'hev1.1.6.L93.B0',
      codedWidth: 1280,
      codedHeight: 720,
      hardwareAcceleration: 'no-preference',
      // bitrate: 8_000_000, // 8 Mbps
      // framerate: 30,
    };
    this.vdecoder_.configure(config);

    // AudioDecoder config
    this.adecoder_ = new AudioDecoder({
      output: this.handleAudioDecoded.bind(this),
      error: (error) => {
        console.error("audio decoder " + error);
      }
    });
    this.adecoder_.configure({ codec: 'opus', numberOfChannels: 2, sampleRate: 48000 });
  }

  // Raw encoded frames come in here:
  // data.data, data.pts, data.flag (non-zero = key frame)
  decodeData(data) {
    // Decoding must start at a key frame, so drop delta frames until one arrives
    if (this.waitKeyframe_) {
      if (data.flag === 0) {
        return;
      }
      this.waitKeyframe_ = false;
      console.log('got first keyframe' + Date.now());
    }
    const chunk = new EncodedVideoChunk({
      timestamp: data.pts, // WebCodecs timestamps are in microseconds
      type: data.flag ? 'key' : 'delta',
      data: data.data
    });
    try {
      this.vdecoder_.decode(chunk);
    } catch (e) {
      // decode() throws once the decoder is closed (e.g. after an error): rebuild it
      this.closeDecode()
      this.openDecode();
      console.log('Webcodecs 00', 'VideoDecoder', e)
    }
  }

  async handleVideoDecoded(frame) {
    // Runs in a worker: hand the VideoFrame to the main thread for display
    self.postMessage({
      type: "decoded",
      data: frame,
    });
    // Release this reference later; early WebCodecs builds named close() destroy()
    setTimeout(function () {
      if (frame.close) {
        frame.close()
      } else {
        frame.destroy()
      }
    }, 100)
  }

  async handleAudioDecoded(frame) {
    // this.awriter_.write(frame);
  }

  closeDecode() {
    if (this.vdecoder_) {
      // this.vdecoder_.flush();
      this.vdecoder_.close();
      this.vdecoder_ = null;
    }
    if (this.adecoder_) {
      //this.adecoder_.flush()
      this.adecoder_.close();
      this.adecoder_ = null;
    }
    this.waitKeyframe_ = true;
  }
}
```

Server

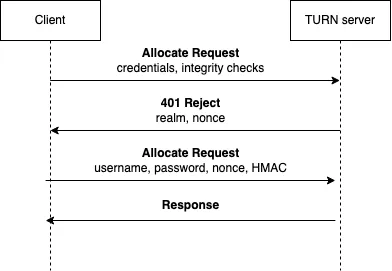

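1. SCTP packetization

For SCTP we only pulled in `sctp.h` and `sctp.c` from Janus. Note: usrsctp provides UDP encapsulation for SCTP, but we need these messages to be encapsulated in DTLS and actually sent/received by ICE rather than by usrsctp itself, so we use the AF_CONN approach.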

```c
/* Sends one encoded frame over the SCTP data channel, prefixed with a 12-byte RTP-style header. */
int ice_transport_send_sctp(struct ice_transport_t* avt, int streamid, uint64_t time, int flags, const void* data, int bytes)
{
    //return ice_agent_send(avt->ice, (uint8_t)0, (uint16_t)1, data, bytes);
    int n = RTP_FIXED_HEADER + bytes;
    unsigned char* ptr = (unsigned char*)calloc(1, n + 1);
    if (!ptr)
        return -1; /* allocation failed */

    rtp_header_t header;
    memset(&header, 0, sizeof(rtp_header_t));
    header.timestamp = (uint32_t)time; /* the RTP timestamp field is 32 bits wide */
    header.v = RTP_VERSION;
    header.p = flags;    /* the P (padding) bit carries the low bit of flags */
    header.pt = 100;
    header.ssrc = bytes; /* the SSRC field carries the payload length */
    nbo_write_rtp_header(ptr, &header);
    memcpy(ptr + RTP_FIXED_HEADER, data, bytes);

    int r = janus_dtls_wrap_sctp_data(avt->dtls, "doc-datachannel", "udp", 0, ptr, n);
    //janus_dtls_wrap_sctp_data(avt->dtls, "doc-datachannel", "udp", 0, data, bytes);
    free(ptr);
    //janus_dtls_send_sctp_data(avt->dtls, data, bytes);
    return r;
}
```
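On the browser side, each data-channel message has to be split back into a timestamp, a flag and the payload. A minimal sketch, assuming `nbo_write_rtp_header()` writes the standard 12-byte RTP header in network byte order; `parseMediaMessage` is a hypothetical name. Because the sender reuses the padding bit for `flags` and the SSRC field for the payload length, the parser reads them back from those positions. The source does not say what unit `time` is in, and only its low 32 bits survive in the header.

```javascript
// Hypothetical parser for messages sent by ice_transport_send_sctp().
const RTP_FIXED_HEADER = 12;

function parseMediaMessage(buffer) {
  const bytes = new Uint8Array(buffer);
  if (bytes.length < RTP_FIXED_HEADER) throw new Error('message shorter than header');
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);

  const b0 = view.getUint8(0);
  const version = b0 >> 6;                     // RTP_VERSION (2)
  const flag = (b0 >> 5) & 1;                  // P bit, set from `flags` by the sender
  const payloadType = view.getUint8(1) & 0x7f; // 100 in the sender
  const pts = view.getUint32(4);               // timestamp field, big-endian
  const length = view.getUint32(8);            // SSRC field, reused as payload length

  if (version !== 2) throw new Error(`unexpected version ${version}`);
  if (RTP_FIXED_HEADER + length > bytes.length) throw new Error('truncated payload');
  return {
    pts,
    flag,
    payloadType,
    data: bytes.subarray(RTP_FIXED_HEADER, RTP_FIXED_HEADER + length),
  };
}
```

With `channel.binaryType = 'arraybuffer'`, a `message` handler could pass `parseMediaMessage(event.data)` to `WebcodecsDecoder.decodeData()`, which reads the same `data`, `pts` and `flag` fields (converting `pts` to microseconds first if the sender uses another unit).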

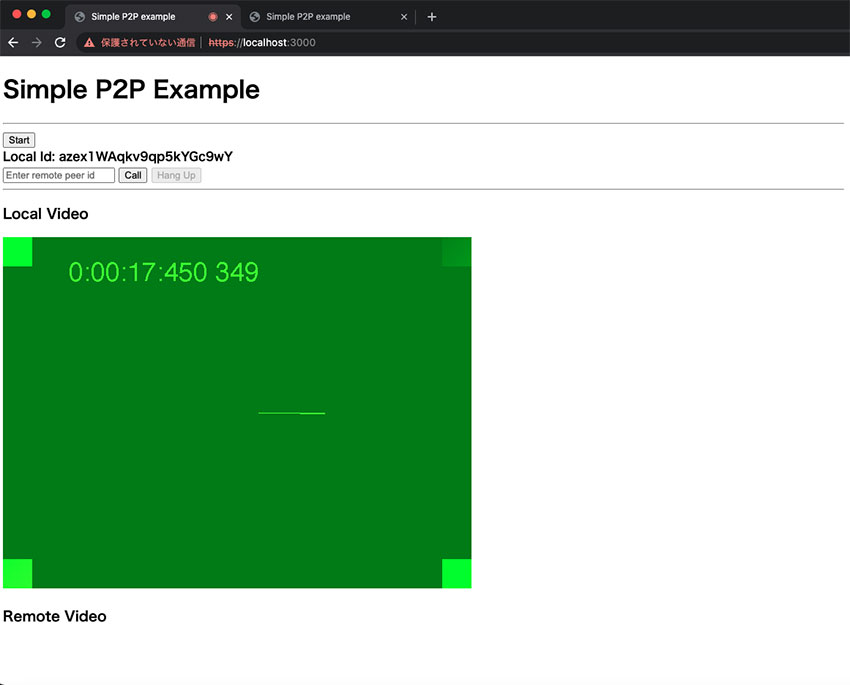

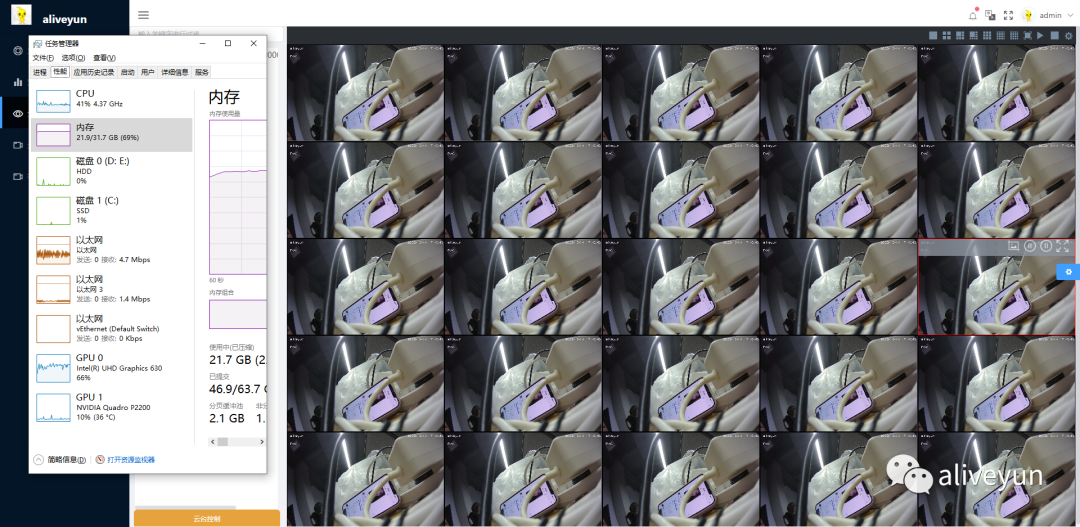

H.265 hardware decoding result

Server binaries download: https://gitee.com/aliveyun/HIMediaServer

Author: Aliveyun (WeChat official account: aliveyun)

